Hello and thanks in advance!

I have a local home FreeNAS server with ZFS pools and a couple of 4 TB hard drives connected. I didn't use mirroring, shame on me.

One of the drives partially died: gpart still shows its partitions, but I get an I/O error when I try to mount it.

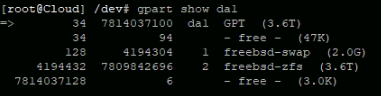

So now I have an image of the drive and the partition layout from the old drive, which is this:

I attached the image (with the mdconfig command) and it is 3 GB smaller than the original drive, but that is OK. However, the image itself has no partition table, and testdisk can't see it.

So I tried to recreate the same partitioning as on the original drive with gpart create and gpart add. The pool still won't mount.
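A minimal sketch of that attach-and-repartition step, assuming a FreeBSD shell with root access. The image path, md unit, start sector and size are hypothetical placeholders, not the values from the original drive, and the `run` and `attach_and_partition` helpers are illustrative names. It only prints the commands unless `DRY_RUN=0` is set, so nothing touches the image by accident.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to really run them (FreeBSD, as root).
DRY_RUN="${DRY_RUN:-1}"

# Print a command, and execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# attach_and_partition IMAGE UNIT ZFS_START ZFS_SIZE
# Attaches IMAGE as /dev/md<UNIT>, creates a GPT on it and adds one
# freebsd-zfs partition at the given start sector and size (in sectors).
attach_and_partition() {
    image="$1"; unit="$2"; start="$3"; size="$4"
    run mdconfig -a -t vnode -f "$image" -u "$unit"
    run gpart create -s gpt "md$unit"
    run gpart add -t freebsd-zfs -b "$start" -s "$size" "md$unit"
    run gpart show "md$unit"
}

# Placeholder values only: take the real start and size from the
# old drive's gpart output, and keep the size within the smaller image.
attach_and_partition /path/to/disk.img 0 128 1000000
```

Since the image is smaller than the original drive, the size passed to `gpart add` has to fit inside the image, so it cannot simply be copied from the old layout.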

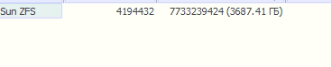

I ran a recovery program on the image and it found a ZFS partition at the same starting offset:

But with that program I can only restore the files themselves, without the folder tree (and there are tons of them).

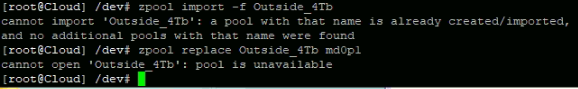

When I try to mount the pool, it shows me this:

And the pool state is UNAVAIL.

What can I do to try to restore the folder tree of that disk?

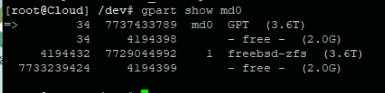

Partitioning of md0:

Thanks in advance again, and I swear to use mirroring in the future!
