Many of us come here to ask questions, but few explain what we are trying to achieve.

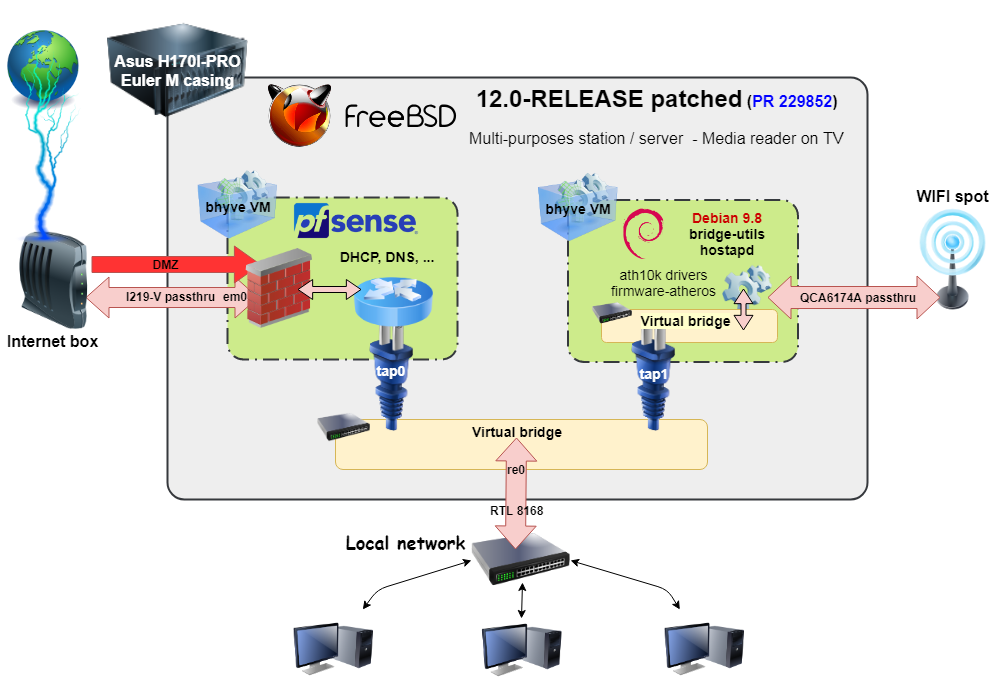

I want to share a project built on FreeBSD. My goal was to set up a firewall / router right behind my internet box that also serves as a media player for my TV.

The configuration is almost complete. The main components are operational and are currently being tested.

The hardware is a silent (0 dB) PC built around an Asus H170I-PRO motherboard, an i3-7300T and 8 GiB of RAM. The machine has to be as quiet as possible because it sits next to the TV and must run all the time. I chose this motherboard because it has two wired network ports and a Wi-Fi device. I added two 250 GB SSDs in a ZFS mirror for system redundancy.

An example of such a PC can be found here: https://www.pcvert.fr/15-20-watts/444-euler-m-h170.html

The main problem is that FreeBSD has no working driver for the Wi-Fi chip (QCA6174). Someone is porting the Linux driver to FreeBSD, but it currently works with the QCA988X and not with the QCA6174: https://github.com/erikarn/athp

So I used a Debian VM to provide Wi-Fi on the box. The firewall / router role is handled by a pfSense VM (based on FreeBSD 11.2). Both run under bhyve, and I had to pass two network devices through to these VMs. On Intel processors, you need the patch attached to PR 229852; without it, the kernel crashes as soon as you start a bhyve VM with a passthrough device.
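
For readers who want to try the same thing, here is a minimal sketch of the host-side part of bhyve PCI passthrough, assuming the standard `vmm`/`ppt` mechanism described in the FreeBSD Handbook. The devices handed to the VMs are reserved at boot in `/boot/loader.conf` by bus/slot/function. The values `2/0/0` and `3/0/0` are hypothetical placeholders, not the ones from my machine; the real ones come from `pciconf -lv` (a device listed as `em1@pci0:3:0:0`, for example, would become `3/0/0`).

```sh
# /boot/loader.conf (sketch; the PCI addresses below are hypothetical)
vmm_load="YES"          # load the bhyve hypervisor module at boot
pptdevs="2/0/0 3/0/0"   # reserve these two PCI devices (bus/slot/function) for passthrough
```

After a reboot, each reserved device can be attached to a guest with a bhyve slot option such as `-s 5,passthru,2/0/0` (the slot number 5 is arbitrary).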

Finally, the hardest part is the multimedia side, where I still have a few minor issues that I haven't solved yet.