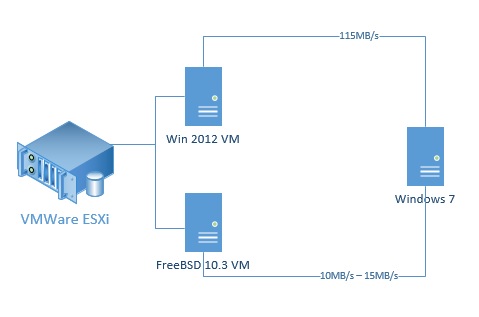

I am experiencing slow network performance on FreeBSD 10.3 (r297264) running as a VM on ESXi 6.0.

To rule out hardware, I set up a Windows Server VM on the same ESXi host and assigned it the same hardware resources as the FreeBSD VM.

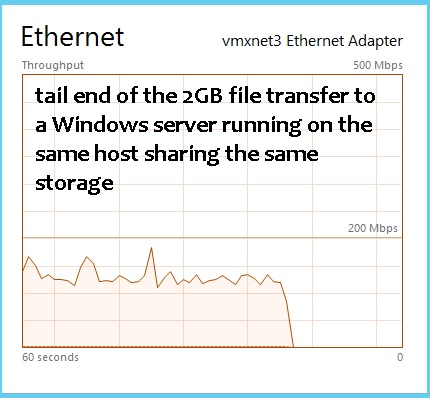

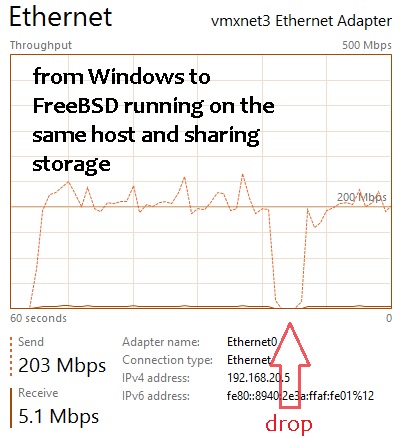

If I copy a 2GB test file over the network (SMB) from another physical machine on the same network to the FreeBSD VM, I get speeds of 20MB/s to 35MB/s, with several drops to 0MB/s lasting 13 to 20 seconds during the transfer.

Copying the same 2GB file to the Windows Server VM averages 115MB/s with no drops.
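
For scale, a rough back-of-the-envelope comparison of the two transfers, assuming 2GB is treated as 2048MB and the quoted speeds are sustained for the whole copy (the 0MB/s stalls are ignored here, so the real FreeBSD times are longer):

$$
\begin{aligned}
t_{\text{Windows}} &= \frac{2048\ \text{MB}}{115\ \text{MB/s}} \approx 17.8\ \text{s} \\
t_{\text{FreeBSD}} &= \frac{2048\ \text{MB}}{35\ \text{MB/s}} \text{ to } \frac{2048\ \text{MB}}{20\ \text{MB/s}} \approx 58.5\ \text{s to } 102.4\ \text{s}
\end{aligned}
$$

So even before counting the 13 to 20 second stalls, the copy to FreeBSD takes roughly 3 to 6 times as long as the copy to Windows Server.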

The slow network performance on FreeBSD occurs with both the VMXNET3 and E1000 NICs.

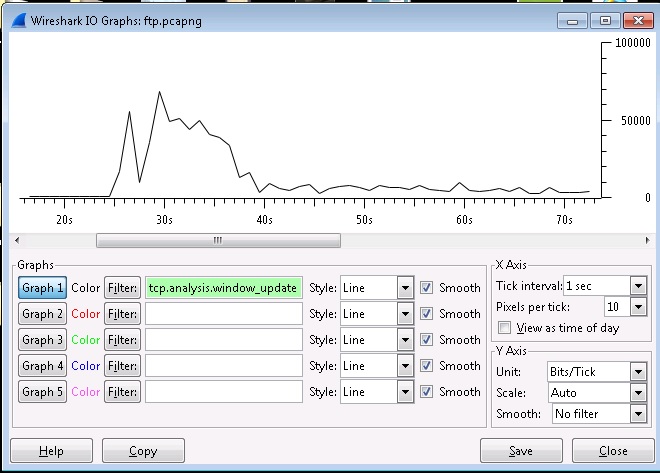

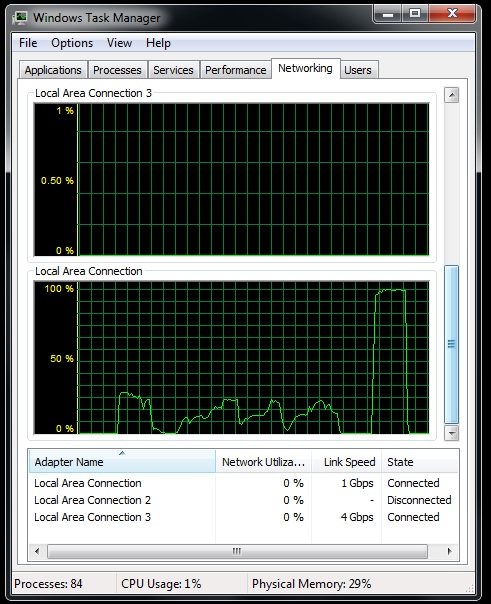

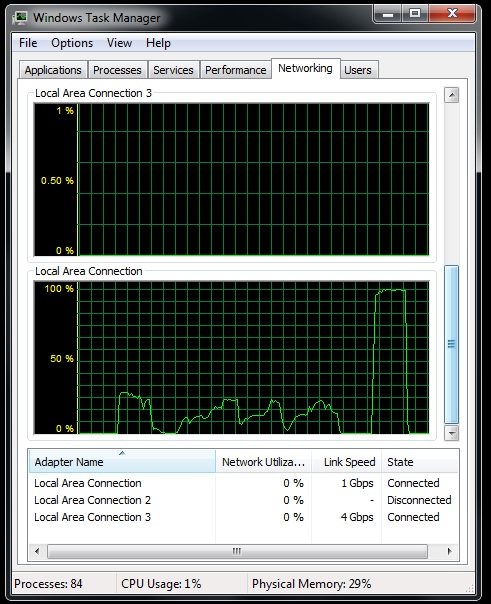

Here is a graph of the network utilization on the computer hosting the 2GB file.

The first portion of the graph is the copy to FreeBSD; the second portion is the copy to Windows Server.

What configuration changes could be made to FreeBSD to optimize network performance?

Thanks