I have used ESXi hosts for years and years, but over the last half year I have been thinking of moving away from ESXi. Proxmox is a no-go due to the network setup, drops, etc., and I really don’t like Proxmox in general. XCP-ng is OK… Then we come to bhyve, and I really want to love bhyve since it’s on FreeBSD, and I run 95% of all my servers on FreeBSD. I’m totally new to bhyve, so...

Two questions.

First, my test server is a standard HPE DL360 G9, nothing special: 2x 2658A v3, 192GB RAM, a 460 10Gbps card, and a P440ar 12G SAS controller with 8 standard Samsung 870 EVO 500GB SSDs, plus a 1.2TB Fusion ioDrive2 that I haven’t gotten working yet.

Q1 - Disk speed:

I have the 8 SSDs in a RAID 10 configuration (hardware RAID on the P440ar) and run ZFS on top of it. I need to flash the card to HBA mode so ZFS can see the disks directly, but I don’t have the SSA (HPE Smart Storage Administrator), so right now I’m stuck with hardware RAID.

On FreeBSD 13p5 (on the host, not in the VM) I get 3.6GB/s with dd:

dd if=/dev/zero of=/root/tempfile bs=5M count=1024; sync

5368709120 bytes transferred in 1.484915 secs (3615499871 bytes/sec)

I know it’s not the best way to test. I have tried fio, speedtest, Phoronix, etc., and every test gives me totally different numbers, so I just went with dd since it works on every OS.

When I run the same test inside a VM (FreeBSD, Ubuntu or others), I get at most 1.1GB/s. Is such a big difference normal? ESXi shows the same drop, even bigger, but my understanding was that bhyve should be very close to bare-metal performance?
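FreeBSD’s dd and the GNU dd shipped with Ubuntu print their summary lines in different formats, so comparing host and guest runs by eye is easy to get wrong. Two caveats also apply to the command above: in `dd ...; sync` the rate dd reports is measured before `sync` runs, so neither the host nor the guest number includes the final flush to disk, and if compression is enabled on the dataset, ZFS may store the all-zero blocks from /dev/zero without writing them out at all. A minimal sketch, assuming a POSIX shell and awk, that turns either dd summary into decimal GB/s; `dd_rate` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# dd_rate (hypothetical helper): print the throughput from a dd summary
# in decimal GB/s (10^9 bytes per second), rounded to two decimals.
# Accepts the FreeBSD dd format and the GNU coreutils format (Ubuntu).
dd_rate() {
    printf '%s\n' "$1" | awk '
        # FreeBSD: "N bytes transferred in S secs (R bytes/sec)"
        / bytes transferred in / { bytes = $1; secs = $5 }
        # GNU: "N bytes (x GB, y GiB) copied, S s, R GB/s"
        / copied, / {
            bytes = $1
            for (i = 1; i < NF; i++)
                if ($(i + 1) == "s,") secs = $i
        }
        END {
            if (secs > 0) {
                printf "%.2f GB/s\n", bytes / secs / 1e9
            } else {
                print "could not parse dd output" > "/dev/stderr"
                exit 1
            }
        }'
}
```

Since dd writes its summary to stderr, one way to use it is `dd_rate "$(LC_ALL=C dd if=/dev/zero of=/root/tempfile bs=5M count=1024 2>&1)"`; the "records in/out" lines are simply ignored. Fed the host line above, it reports 3.62 GB/s.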

Q2 - CPU cores

In the VM config file/shell script you set:

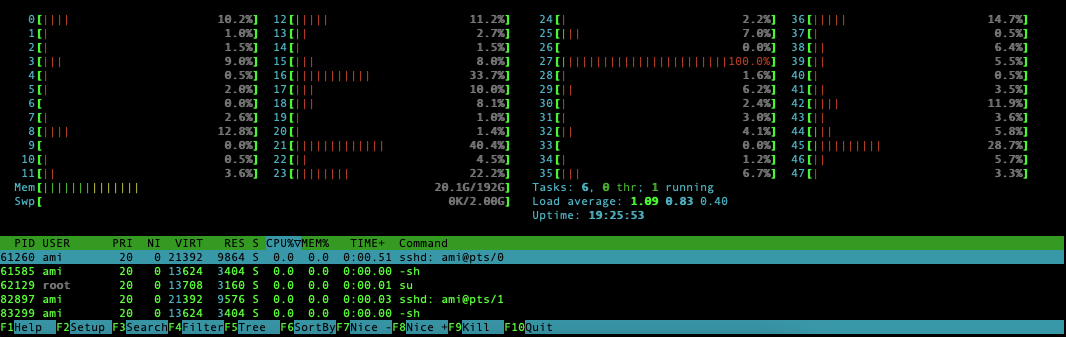

Here -c 4 means 4 cores for this VM. But when I check the load on the host while the VM is running dd, it’s all over the place.

Picture from the FreeBSD host - not the VM.

Is that normal? Why not 4 cores?

I’m running only 1 VM, with the dd command above. After dd finishes, all cores idle at 0%.

If I start 4, 5 or 6 VMs under load, well... then there’s a war going on.
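One likely explanation: `-c 4` gives the guest four vCPUs, and bhyve runs each vCPU as a thread of the bhyve process. Unless they are pinned, the FreeBSD scheduler may run those threads on any host core and move them around, and the device-emulation threads (for example the ones servicing disk I/O) are separate threads as well, so load spread across many cores is expected rather than a fault. bhyve(8) has a `-p vcpu:hostcpu` option to pin individual vCPUs to host CPUs. The sketch below, assuming a POSIX sh, builds those arguments; `pin_args` is a hypothetical helper, and which host CPU ranges make sense (sockets, hyperthreads) depends on the machine.

```shell
#!/bin/sh
# pin_args (hypothetical helper): build bhyve(8) "-p vcpu:hostcpu" options
# that pin guest vCPUs 0..NCPU-1 to consecutive host CPUs starting at FIRST.
# Usage: pin_args NCPU FIRST
pin_args() {
    ncpu=$1     # number of guest vCPUs; should match the bhyve -c value
    first=$2    # first host CPU in the range reserved for this VM
    args=""
    i=0
    while [ "$i" -lt "$ncpu" ]; do
        args="$args -p $i:$((first + i))"
        i=$((i + 1))
    done
    # Drop the leading space left by the first iteration.
    printf '%s\n' "${args# }"
}
```

For example, `bhyve -c 4 $(pin_args 4 8) -m 8G …` (rest of the arguments unchanged) expands to `-p 0:8 -p 1:9 -p 2:10 -p 3:11`. When running several VMs, giving each one its own range (0, 4, 8, ...) keeps their vCPUs off each other’s cores, although the emulation threads can still land anywhere.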
