Hi!

Can anyone tell me how to increase the disk space available to /usr/home?

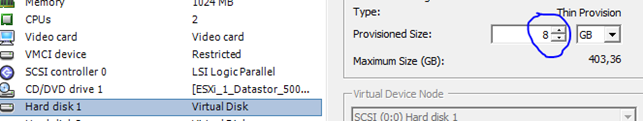

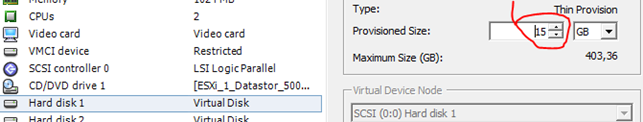

I increased the disk to 15 G (+7G), but gpart does not see the added space.

Is it possible to increase the size of /usr/home without rebooting the server?

Code:

# freebsd-version

11.0-RELEASE-p8

Code:

# cat /etc/rc.conf | grep zfs

zfs_enable="YES"

Code:

# cat /etc/rc.conf | grep vmw

vmware_guest_vmblock_enable="NO"

vmware_guest_vmhgfs_enable="NO"

vmware_guest_vmmemctl_enable="NO"

vmware_guest_vmxnet_enable="NO"

vmware_guestd_enable="YES"

Code:

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

zroot 3,10G 4,59G 96K /zroot

zroot/ROOT 954M 4,59G 96K none

zroot/ROOT/default 954M 4,59G 954M /

zroot/tmp 144K 4,59G 144K /tmp

zroot/usr 2,16G 4,59G 96K /usr

zroot/usr/home 136K 4,59G 136K /usr/home

zroot/usr/ports 976M 4,59G 976M /usr/ports

zroot/usr/src 1,21G 4,59G 1,21G /usr/src

zroot/var 652K 4,59G 96K /var

zroot/var/audit 96K 4,59G 96K /var/audit

zroot/var/crash 96K 4,59G 96K /var/crash

zroot/var/log 172K 4,59G 172K /var/log

zroot/var/mail 96K 4,59G 96K /var/mail

zroot/var/tmp 96K 4,59G 96K /var/tmp

root@gitlab ~ #

Code:

~ # gpart show

=> 40 16777136 da0 GPT (8.0G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16773120 2 freebsd-zfs (8.0G)

16775168 2008 - free - (1.0M)

=> 40 4194224 da1 GPT (2.0G)

40 4194224 1 freebsd-swap (2.0G)

Code:

# gpart list

Geom name: da0

modified: false

state: OK

fwheads: 255

fwsectors: 63

last: 16777175

first: 40

entries: 152

scheme: GPT

Providers:

1. Name: da0p1

Mediasize: 524288 (512K)

Sectorsize: 512

Stripesize: 0

Stripeoffset: 20480

Mode: r0w0e0

rawuuid: eb5f5a84-1ad0-11e7-a0d8-000c29945625

rawtype: 83bd6b9d-7f41-11dc-be0b-001560b84f0f

label: gptboot0

length: 524288

offset: 20480

type: freebsd-boot

index: 1

end: 1063

start: 40

2. Name: da0p2

Mediasize: 8587837440 (8.0G)

Sectorsize: 512

Stripesize: 0

Stripeoffset: 1048576

Mode: r1w1e1

rawuuid: eb680ce3-1ad0-11e7-a0d8-000c29945625

rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b

label: zfs0

length: 8587837440

offset: 1048576

type: freebsd-zfs

index: 2

end: 16775167

start: 2048

Consumers:

1. Name: da0

Mediasize: 8589934592 (8.0G)

Sectorsize: 512

Mode: r1w1e2

Geom name: da1

modified: false

state: OK

fwheads: 255

fwsectors: 63

last: 4194263

first: 40

entries: 152

scheme: GPT

Providers:

1. Name: da1p1

Mediasize: 2147442688 (2.0G)

Sectorsize: 512

Stripesize: 0

Stripeoffset: 20480

Mode: r1w1e0

rawuuid: f0da7df4-1ad3-11e7-b648-000c29945625

rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b

label: (null)

length: 2147442688

offset: 20480

type: freebsd-swap

index: 1

end: 4194263

start: 40

Consumers:

1. Name: da1

Mediasize: 2147483648 (2.0G)

Sectorsize: 512

Mode: r1w1e1

Code:

# zpool status

pool: zroot

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

da0p2 ONLINE 0 0 0

errors: No known data errors

Code:

# zpool list

NAME SIZE ALLOC FREE EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 7,94G 3,01G 4,93G - 24% 37% 1.00x ONLINE -

Code:

# zpool get autoexpand

NAME PROPERTY VALUE SOURCE

zroot autoexpand on local

After the disk was increased to 15 G (+7G), gpart still does not see the added space:

Code:

# gpart show

=> 40 16777136 da0 GPT (8.0G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 16773120 2 freebsd-zfs (8.0G)

16775168 2008 - free - (1.0M)

=> 40 4194224 da1 GPT (2.0G)

40 4194224 1 freebsd-swap (2.0G)