Hi,

I have a bunch of FreeBSD servers in virtual machines back home (Europe), and I am half a world away (Asia) with a Windows 7 laptop. I need to transfer large files from the server to the client, over TCP (mainly HTTPS via nginx and HTTP/2).

Server (FreeBSD; Europe) and client (Win 7; Asia) are both on 100 Mbps connections. RTT is 390 ms (+/- 60 ms).

I'd like to tune the FreeBSD TCP settings for higher throughput when transferring files from the server to the client.

As a start I've tried two different congestion control algorithms, H-TCP and CUBIC. With both of these I seem to run into a fundamental problem where the speed of the transfer will sometimes drop to a trickle, and for long periods of time. I suspect it is triggered if the client reports a substantial amount of packets lost or out of order.

It happens sporadically, and quite frequently, with both H-TCP and CUBIC. I believe I've seen it also with the default congestion control algorithm newreno, however much more seldom, and it takes much much longer, as it is substantially slower to ramp up the speed.

During the slow periods, it can drop to a mere trickle, of no more than 1-5 packets per RTT period. Transfers appear mostly stalled, but from packet sniffing I verify that data is being (re-)transmitted, just very very slowly. These slow transfer speed events can last from a few seconds up to several minutes. What I see on the wire (using tcpdump on the server side) is that the client reports a flood of TCP Dup Ack, which triggers retransmissions. I assume that when the line is heavily used, sometimes a large amount of packets are lost. Such a burst of packet loss sometimes result in the server slowing down its sending of TCP packets to only sending retransmissions, and only from explicit ACKs. E.g. 4 ACKs in = 4 retransmitted packets out.

sysctl settings different from default, with H-TCP active:

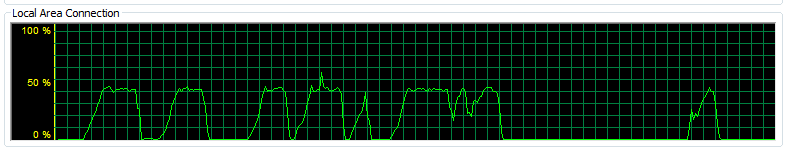

Here's what the traffic pattern looks like with H-TCP active, transferring a 2+ GB file:

You can clearly see the frequent drops in throughput down to 0, and especially the long periods of next to no data toward the end.

Are there better options for TCP congestion control algorithms than H-TCP or CUBIC for "long fat pipes" (high bandwidth, high latency, generally low but sporadic packet loss)?

Are there additional tunable parameters which can alleviate these symptoms of trickling retransmissions on high latency links?

Or perhaps better yet, something relatively simple for pushing data over UDP. Using iperf I've managed 65-70 Mbps with very low packet loss (2-5%).

I have a bunch of FreeBSD servers in virtual machines back home (Europe), and I am half a world away (Asia) with a Windows 7 laptop. I need to transfer large files from the server to the client, over TCP (mainly HTTPS via nginx and HTTP/2).

Server (FreeBSD; Europe) and client (Win 7; Asia) are both on 100 Mbps connections. RTT is 390 ms (+/- 60 ms).
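For reference, here is a back-of-the-envelope sketch of the bandwidth-delay product (BDP) for this link. It shows roughly how many bytes must be in flight (bounded by the congestion window, the client's receive window and the server's send buffer) to keep a 100 Mbit/s path full at 330-450 ms RTT. The 1448-byte MSS is an assumption (1500-byte MTU minus IPv4/TCP headers and the timestamp option), not something measured here.

```python
# Bandwidth-delay product (BDP) for the link described above.
# Assumptions: 100 Mbit/s bottleneck, RTT of 390 ms +/- 60 ms,
# and a 1448-byte MSS (assumed, not measured).

LINK_BPS = 100_000_000  # 100 Mbit/s
MSS = 1448              # bytes per segment (assumed)


def bdp_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the link fully utilised."""
    return link_bps * rtt_s / 8


for rtt_ms in (330, 390, 450):
    bdp = bdp_bytes(LINK_BPS, rtt_ms / 1000)
    print(f"RTT {rtt_ms} ms: BDP = {bdp / 2**20:.2f} MiB "
          f"(~{bdp / MSS:.0f} segments)")
```

At 390 ms this comes to about 4.6 MiB, so any window or socket buffer noticeably smaller than that caps throughput below line rate regardless of the congestion control algorithm.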

I'd like to tune the FreeBSD TCP settings for higher throughput when transferring files from the server to the client.

As a start, I've tried two different congestion control algorithms, H-TCP and CUBIC. With both, I run into what seems to be a fundamental problem: the transfer speed sometimes drops to a trickle and stays there for long periods. I suspect this is triggered when the client reports a substantial number of lost or out-of-order packets.

It happens sporadically but quite frequently with both H-TCP and CUBIC. I believe I've also seen it with the default congestion control algorithm, newreno, though much more rarely, and it takes much longer to occur, since newreno is substantially slower to ramp up the speed.
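To illustrate why newreno ramps up so slowly on this path, here is a rough estimate assuming standard Reno-style congestion avoidance (cwnd grows by about one segment per RTT and is halved on a loss event). The link parameters and the 1448-byte MSS are assumptions carried over from the figures above; real recovery also depends on further losses, SACK behaviour and buffer limits.

```python
# Rough estimate: how long Reno-style additive increase (+1 MSS per RTT)
# needs to grow cwnd from BDP/2 back to BDP after a single halving.
# Assumptions: 100 Mbit/s, 390 ms RTT, 1448-byte MSS, no further losses.

LINK_BPS = 100_000_000
RTT_S = 0.390
MSS = 1448


def recovery_seconds(link_bps: float, rtt_s: float, mss: int) -> float:
    """Seconds to climb from half the BDP to the full BDP at +1 segment/RTT."""
    bdp_segments = link_bps * rtt_s / 8 / mss
    rtts_needed = bdp_segments / 2  # from BDP/2 up to BDP, one segment per RTT
    return rtts_needed * rtt_s


t = recovery_seconds(LINK_BPS, RTT_S, MSS)
print(f"~{t:.0f} s (~{t / 60:.1f} min) to regain full rate after one halving")
```

Under these assumptions a single loss costs on the order of ten minutes of reduced rate, which is the gap that CUBIC and H-TCP are designed to shorten on high-BDP paths.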

During the slow periods, the rate can drop to a mere trickle of no more than 1-5 packets per RTT. Transfers appear mostly stalled, but packet sniffing confirms that data is being (re)transmitted, just very slowly. These slowdowns last anywhere from a few seconds to several minutes. What I see on the wire (using tcpdump on the server side) is the client sending a flood of duplicate ACKs, which trigger retransmissions. I assume that when the line is heavily used, a large burst of packets is sometimes lost. Such a burst of loss sometimes results in the server sending nothing but retransmissions, one per incoming ACK, e.g. 4 ACKs in = 4 retransmitted packets out.
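To put "1-5 packets per RTT" in numbers, the sketch below computes the sending rate that implies. It assumes full-sized 1448-byte segments and the 330-450 ms RTT range quoted above; the MSS is an assumption.

```python
# Sending rate implied by only a handful of segments leaving per RTT.
# Assumptions: full-sized 1448-byte segments, RTT between 330 and 450 ms.

MSS = 1448


def throughput_kbps(segments_per_rtt: int, rtt_s: float, mss: int = MSS) -> float:
    """Rate in kbit/s when only `segments_per_rtt` segments are sent per RTT."""
    return segments_per_rtt * mss * 8 / rtt_s / 1000


for segs in (1, 5):
    for rtt_ms in (330, 390, 450):
        print(f"{segs} seg/RTT @ {rtt_ms} ms: "
              f"{throughput_kbps(segs, rtt_ms / 1000):.1f} kbit/s")
```

Even the upper end, 5 segments at 390 ms, works out to roughly 150 kbit/s, well under 1% of the 100 Mbit/s link.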

sysctl settings different from default, with H-TCP active:

net.inet.tcp.cc.htcp.rtt_scaling: 1

net.inet.tcp.cc.htcp.adaptive_backoff: 1

net.inet.tcp.cc.algorithm: htcp

Here's what the traffic pattern looks like with H-TCP active, transferring a 2+ GB file:

You can clearly see the frequent drops in throughput down to 0, and especially the long periods of next to no data toward the end.

Are there better TCP congestion control algorithms than H-TCP or CUBIC for "long fat pipes" (high bandwidth, high latency, generally low but sporadic packet loss)?

Are there additional tunable parameters which can alleviate these symptoms of trickling retransmissions on high latency links?

Or, perhaps better yet, is there something relatively simple for pushing data over UDP? Using iperf, I've managed 65-70 Mbps with relatively low packet loss (2-5%).
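For context on those loss figures, the well-known Mathis et al. approximation estimates steady-state throughput for loss-based, Reno-style TCP as roughly (MSS / RTT) * C / sqrt(p), with C about sqrt(3/2). It does not model CUBIC or H-TCP, and the loss rate iperf measures for UDP at 65-70 Mbps may not match what a TCP flow experiences, so this is only an order-of-magnitude sketch. The 1448-byte MSS and the loss values other than 2% and 5% are assumptions chosen for comparison.

```python
import math

# Mathis et al. approximation for Reno-style TCP under random loss:
#   rate ~= (MSS / RTT) * C / sqrt(p),  C ~= sqrt(3/2)
# Models loss-based AIMD (newreno), not CUBIC or H-TCP; illustration only.
# Assumptions: 1448-byte MSS, 390 ms RTT.

MSS = 1448
RTT_S = 0.390
C = math.sqrt(3 / 2)


def mathis_mbps(loss_rate: float, mss: int = MSS, rtt_s: float = RTT_S) -> float:
    """Approximate Reno-style TCP throughput in Mbit/s for a given loss rate."""
    return (mss * 8 / rtt_s) * C / math.sqrt(loss_rate) / 1e6


for p in (0.0001, 0.001, 0.02, 0.05):
    print(f"loss {p:.2%}: ~{mathis_mbps(p):.2f} Mbit/s")
```

At 2% loss and 390 ms RTT the estimate is only about 0.26 Mbit/s, and even 0.01% loss caps it at a few Mbit/s, which suggests why loss-based TCP struggles on this path while a UDP sender that ignores loss can reach 65-70 Mbps.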