My automated installer configures 3 SAS drives in a ZFS mirror: 2 drives are active and the third is a spare.

There is also an NVMe drive, which I set up as swap so I can capture the result of any kernel panics.

I've tested this in a VM over and over with no issues.

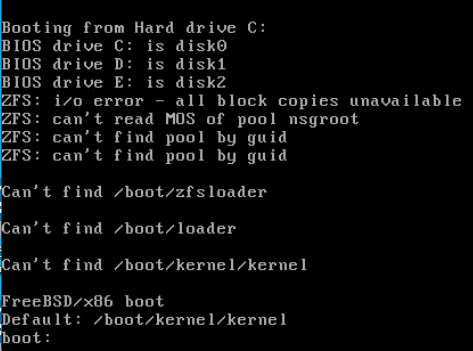

On first boot on the real hardware I am presented with:

I then booted the install CD and dropped into the shell.

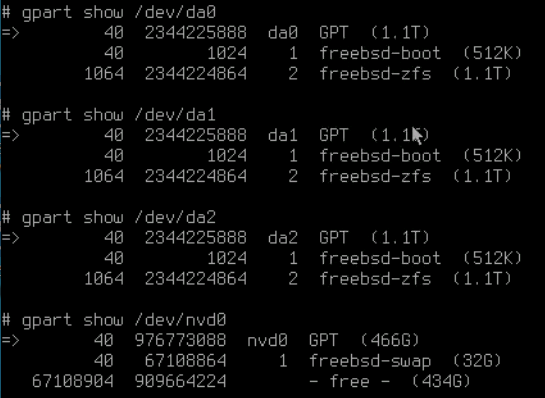

I can see all the gpart info.

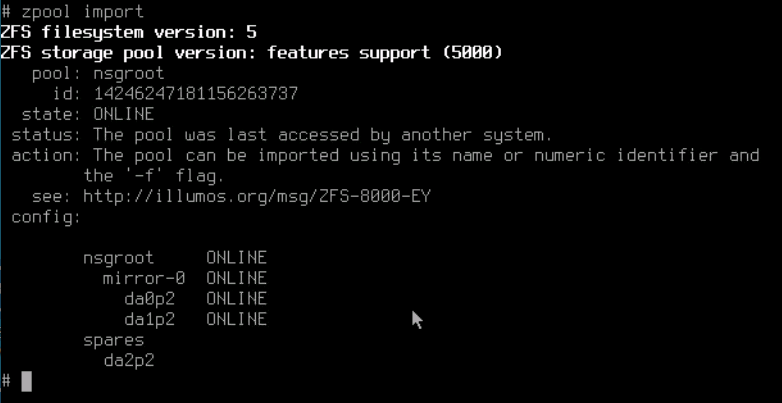

And I can see the pool.

I imported the pool, removed the spare, and attached it as a third drive in the mirror.
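The post does not give the exact commands, so the following is only a sketch of what this step might look like from the install CD shell. The pool name `zroot` and the partition names `da0p3` and `da2p3` are assumptions, not taken from the post. The script prints the commands by default and runs them only when `DRY_RUN=0` is set.

```sh
#!/bin/sh
# Sketch only: the pool and partition names are hypothetical placeholders.
POOL="zroot"      # assumed pool name
ACTIVE="da0p3"    # assumed ZFS partition of an existing mirror member
SPARE="da2p3"     # assumed ZFS partition of the former spare

# Print each command by default; set DRY_RUN=0 to execute for real.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

promote_spare() {
    run zpool import -f -R /mnt "$POOL"          # import under an alternate root
    run zpool remove "$POOL" "$SPARE"            # drop the device from the spares list
    run zpool attach "$POOL" "$ACTIVE" "$SPARE"  # attach it as a third mirror member
    run zpool status "$POOL"                     # check resilver progress
}

promote_spare
```

After the attach, `zpool status` should list three devices under the mirror vdev, with the new one resilvering.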

I also rewrote the bootcode on all 3 SAS drives and rebooted.
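For reference, rewriting the bootcode could look roughly like the sketch below. It assumes the server boots in legacy BIOS mode, that each disk has a `freebsd-boot` partition at index 1, and that the disks are named `da0`, `da1` and `da2`; none of these details are stated in the post. As above, commands are only printed unless `DRY_RUN=0` is set.

```sh
#!/bin/sh
# Sketch only: disk names and the partition index are hypothetical placeholders.
DISKS="da0 da1 da2"   # assumed device names of the three SAS drives
BOOT_INDEX=1          # assumed index of the freebsd-boot partition

# Print each command by default; set DRY_RUN=0 to execute for real.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

rewrite_bootcode() {
    for disk in $DISKS; do
        # Install the protective MBR and the ZFS-aware GPT boot loader.
        run gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i "$BOOT_INDEX" "$disk"
    done
}

rewrite_bootcode
```

If the machine boots via UEFI instead, `gpart bootcode` is not what the firmware uses; the loader on the EFI system partition would be the thing to check.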

Sadly I'm still getting the same error.

I have been running the same install on the same server with only 2 SAS drives in a ZFS mirror. In that setup the NVMe drive is present but not configured, and the system boots fine.

The system is a Dell PowerEdge R440 with an HBA330 controller.
