Usually I communicate my FreeBSD-related work on the mailing lists and bug trackers, but I've just registered on the forum as well.

I'd like to bring to your attention a similar effort I began over a year ago.

It is entirely my fault that we have both worked on this without sharing efforts so far; however, I hope we can combine them and find the best way forward.

Version 0.1 of freebsd-gpu-headless consisted of two parts:

- A slave port of nvidia-driver, called nvidia-headless-driver, with no changes other than file paths fixed up to avoid breaking Mesa GLX.

- A set of scripts, called nvidia-headless-utils, with an nvidia-xconfig wrapper to ease configuration and a VirtualGL wrapper to enable rendering to the integrated GPU's display.

Support was only basic and required a bit of manual configuration. I never got this version polished well enough to submit it to FreeBSD, though maybe it would have been good enough. I did a very poor job of publicizing the work; its only mention is at

https://lists.freebsd.org/pipermail/freebsd-x11/2018-March/020675.html

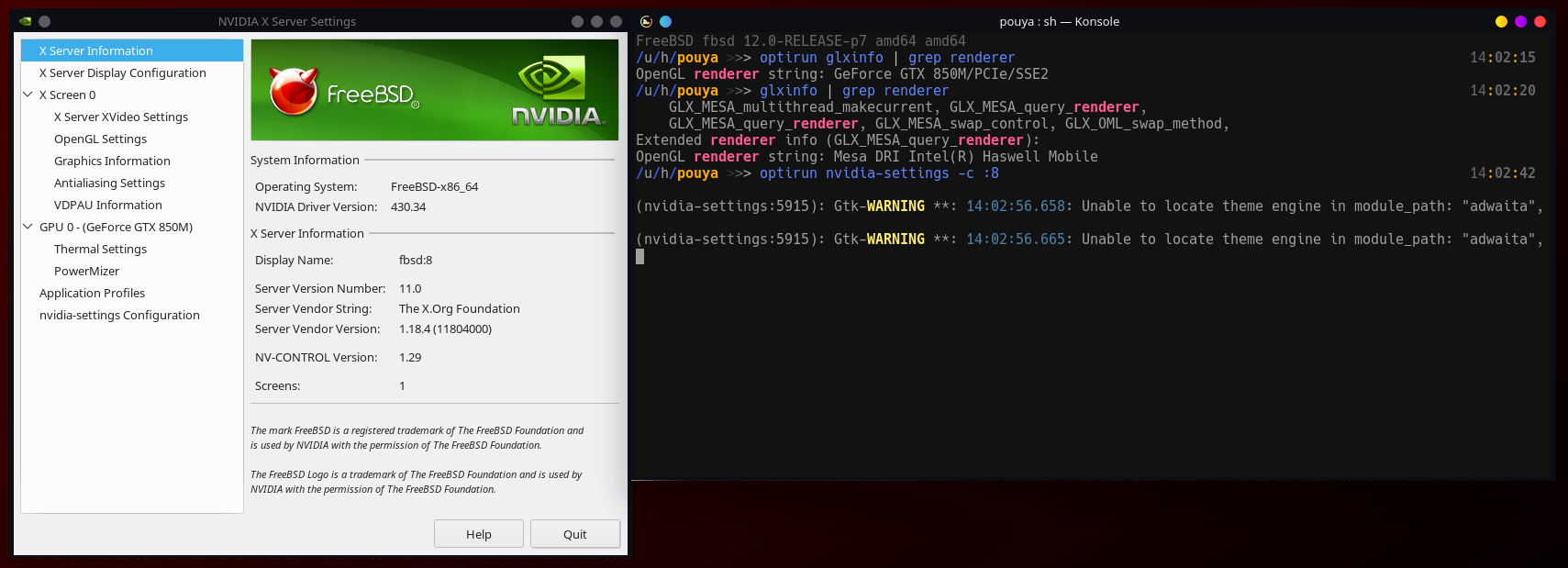

Since then (for the last year) I have had a "version 0.2" as local changes to my installation, with working nvidia-xconfig, on-demand power switching with acpi_call, and Linux support (both 32- and 64-bit). Unfortunately, I did not have sufficient time and motivation to give this the attention it deserved towards making it official.

Realizing, as I did this last weekend, that others are moving ahead on this and potentially duplicating this effort, I've taken the time to wrap this up and publish a version 0.3. It is essentially ready to be submitted for inclusion in Ports, pending your feedback.

github.com/therontarigo/freebsd-gpu-headless (OpenGL on headless Nvidia GPU on FreeBSD)

Some parts that I have had working are not quite ready, including the Linux support and power switching. The rest is there, including the rc.d script for running Nvidia in the background. In fact, keeping Xorg attached achieves the majority of the power savings, as it prevents the GPU from spinning and wasting power. On my Dell XPS, the difference in consumption between running with Xorg idle and disabling the device via ACPI is only ~0.5 W, whereas leaving the GPU in its power-on state with no Xorg, kernel module loaded or not, wastes about 5 W!

Other things I have addressed:

- User expects nvidia-xconfig to just work. This is accomplished with a very minor change to that port.

- The display number used for Nvidia is configurable and may be overridden in the environment.

- Two ports for two use-cases:

  - nvidia-headless-utils: For using Nvidia as a compute resource on a headless server.

  - nvidia-hybrid-graphics: For notebook computers with iGPU+dGPU ("Optimus").

- nvidia-headless-driver is just a minimal slave port. The changes needed to nvidia-driver are also minimal and noninvasive. This is very important for future maintainability.
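To illustrate the configurable display number mentioned in the list above, here is a minimal sketch of how a wrapper might choose the display and hand it to VirtualGL. The variable name `NVIDIA_DISPLAY` and the default `:8` are assumptions made up for this example, not what the ports actually use; only `vglrun -d` is a real VirtualGL option.

```python
import os
import shlex

# Hypothetical default display for the background Nvidia X server.
DEFAULT_DISPLAY = ":8"


def resolve_display(env, default=DEFAULT_DISPLAY):
    """Return the Nvidia X display, letting the environment override the default."""
    # NVIDIA_DISPLAY is an illustrative variable name, not taken from the ports.
    value = env.get("NVIDIA_DISPLAY", "").strip()
    return value or default


def vglrun_command(app_argv, env):
    """Build a vglrun command that renders on the resolved display."""
    # "vglrun -d <display>" selects the X display used for 3D rendering.
    return ["vglrun", "-d", resolve_display(env)] + list(app_argv)


if __name__ == "__main__":
    # Print the command that would be run for a sample application.
    print(shlex.join(vglrun_command(["glxgears"], os.environ)))
```

With nothing set in the environment the sketch falls back to the default; exporting the variable before running the wrapper redirects rendering to another display without touching any configuration file.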

Since you have gotten this far with your "optimus" port, I gather that you have a good understanding of what is needed, and might have the time to help integrate what I have here. I strongly recommend breaking it down into small, understandable components with discrete purposes, rather than a single port for everything. I agree with shkhln that having all the changes in a single Makefile is hard to follow (and it is all in there alongside the nvidia-driver internals).

I've avoided calling mine "Optimus" (although I do include that name in the description so it can be searched) since, as others have noted, Optimus is the proprietary switching software, something that will never be on FreeBSD unless provided by Nvidia themselves. "Hybrid" seems an acceptable genericized name.

So far, nvidia-hybrid-graphics depends on VirtualGL because that is currently the only working solution.

Real PRIME support would be great!