Hello.

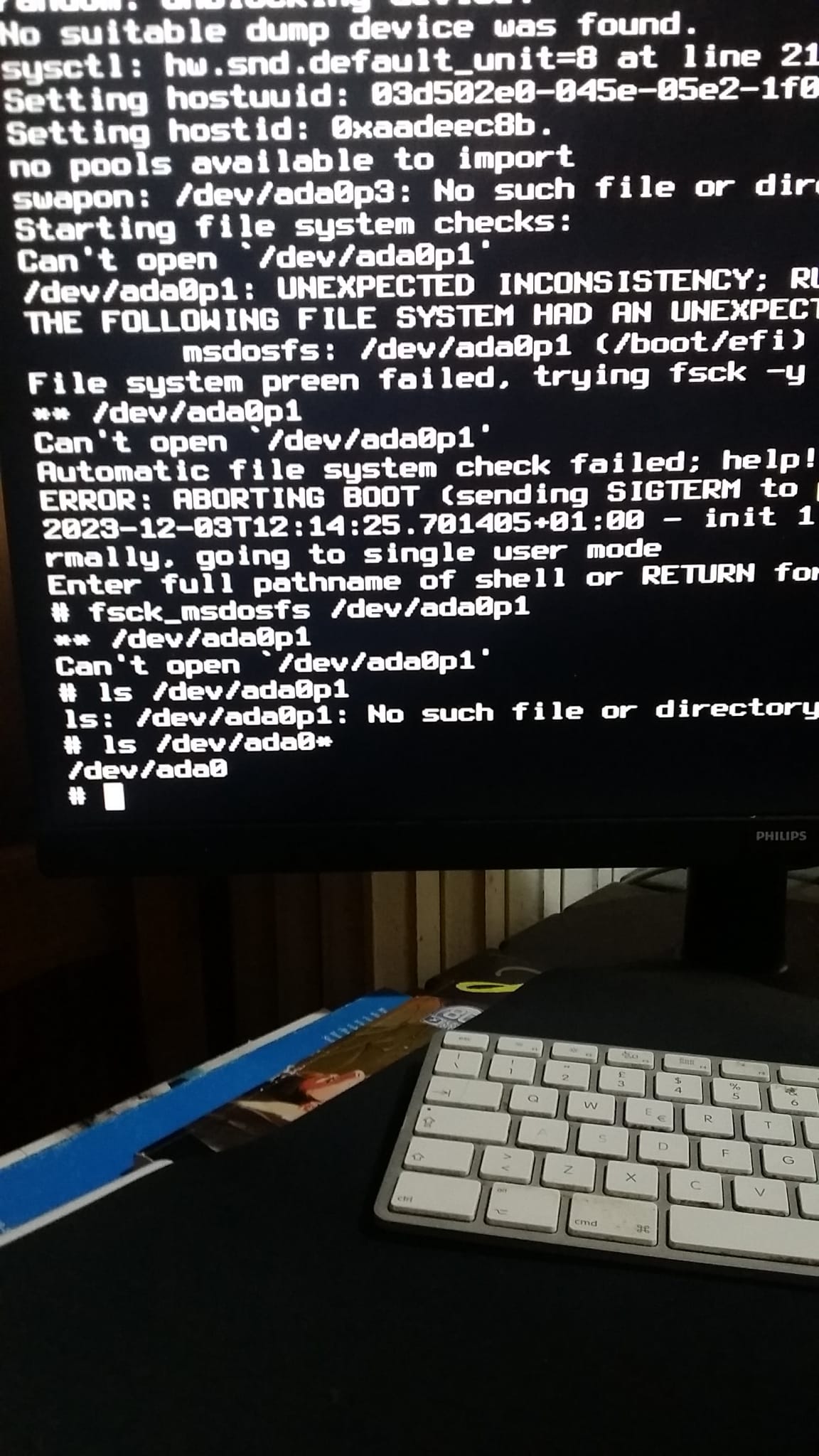

I'm not able to boot my primary FreeBSD installation anymore.

It is stored on this disk:

Code:

Disk /dev/sda: 465.76 GiB, 500107862016 bytes, 976773168 sectors

Disk model: CT500MX500SSD4

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: C4E17451-AE72-11EC-9419-E0D55EE21F22

Device Start End Sectors Size Type

/dev/sda1 40 532519 532480 260M EFI System

/dev/sda2 532520 533543 1024 512K FreeBSD boot

/dev/sda3 534528 4728831 4194304 2G FreeBSD swap

/dev/sda4 4728832 976773119 972044288 463.5G FreeBSD ZFS

I switched to Linux to check what could have happened to the ZFS / zpool structure:

Code:

# zpool import -f -R /mnt/zroot zroot

# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 460G 425G 35.1G - - 55% 92% 1.00x ONLINE /mnt/zroot

# ls

_13.2_CURRENT_ boot build-xen dev kernels lib-backup mnt proc sbin tmp vms

bhyve boot-bo compat etc lib libexec net rescue share usr _ZFS_

bin build data home lib64 media opt root sys var zroot

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

zroot 425G 20.8G 96K /mnt/zroot/zroot

zroot/ROOT 345G 20.8G 96K none

zroot/ROOT/13.1-RELEASE-p5_2023-01-12_235731 268M 20.8G 136G /mnt/zroot

zroot/ROOT/13.1-RELEASE-p5_2023-01-20_181957 344G 20.8G 292G /mnt/zroot

zroot/ROOT/13.1-RELEASE-p5_2023-01-20_181957@2023-01-20-18:19:57-0 52.1G - 140G -

zroot/tmp 198M 20.8G 198M /mnt/zroot/tmp

zroot/usr 77.6G 20.8G 120K /mnt/zroot/usr

zroot/usr/home 63.4G 20.8G 63.4G /mnt/zroot/usr/home

zroot/usr/ports 14.2G 20.8G 14.2G /mnt/zroot/usr/ports

zroot/usr/src-old 96K 20.8G 96K /mnt/zroot/usr/src-old

zroot/var 2.46G 20.8G 136K /mnt/zroot/var

zroot/var/audit 96K 20.8G 96K /mnt/zroot/var/audit

zroot/var/crash 1.11G 20.8G 1.11G /mnt/zroot/var/crash

zroot/var/log 4.75M 20.8G 4.75M /mnt/zroot/var/log

zroot/var/mail 1.33G 20.8G 1.33G /mnt/zroot/var/mail

zroot/var/tmp 18.1M 20.8G 18.1M /mnt/zroot/var/tmp

My /boot/loader.conf:

Code:

#currdev="zfs:zroot/ROOT/13.1-RELEASE-p5_2023-01-20_181957"

#vfs.root.mountfrom="zroot/ROOT/13.1-RELEASE-p5_2023-01-20_181957"

#currdev="zfs:zroot/ROOT/13.1-RELEASE-p5_2023-01-12_235731"

#opensolaris_load="YES"

#loaddev="disk2p1:"

loader_logo="daemon"

vmm_load="YES"

nmdm_load="YES"

if_tap_load="YES"

if_bridge_load="YES"

bridgestp_load="YES"

fusefs_load="YES"

tmpfs_load="YES"

verbose_loading="YES"

pptdevs="2/0/0 2/0/1 2/0/2 2/0/3"

kern.geom.label.ufsid.enable="1"

cryptodev_load="YES"

zfs_load="YES"

kern.racct.enable="1"

aio_load="YES"

vboxdrv_load="YES"

kern.cam.scsi_delay="10000"

fdescfs_load="YES"

linprocfs_load="YES"

linsysfs_load="YES"

I have also tried to uncomment these lines:

Code:

currdev="zfs:zroot/ROOT/13.1-RELEASE-p5_2023-01-20_181957"

or

currdev="zfs:zroot/ROOT/13.1-RELEASE-p5_2023-01-12_235731"

and / or

loaddev="disk2p1:"

but I still get the same problem. I don't understand what could be wrong.