First, contact SuperMicro. Not because you want to beat them up, not because you want to harass them, not even because you want a refund. But because you want to help them fix this problem. I'm sure their engineering department is very interested in this. They are located in the San Francisco bay area, so that might be a sensible time zone for you. I've dealt with their sales and engineering folks a few times, and they are really friendly and competent.

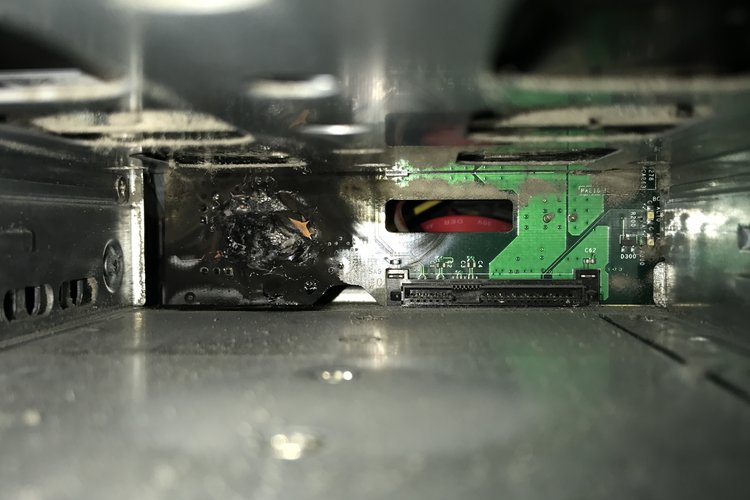

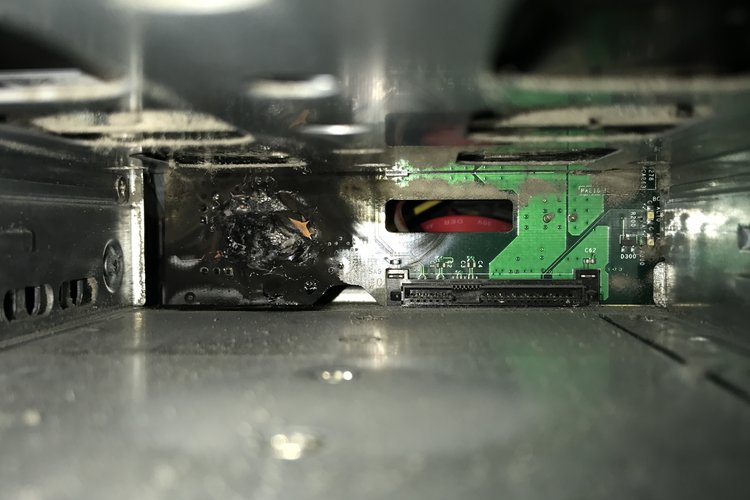

My theory would be a short in the power traces of the backplane, inside the PC board. Given that PC power supplies can easily make 1/2 or 3/4 kiloWatt (and run at currents that are awfully close to what an arc-welder uses), this is not surprising.

But I've never seen this kind of backplane damage caused by the backplane itself. I've seen a 60-disk enclosure (name withheld, don't want to hurt anyone's feelings) where one power supply caught fire. Fortunately, no disk drives were hurt (the power crowbar circuit worked), and the data was fine afterwards. According to the local service person, the smoke and fire damage was spectacular.

Getting back to your original question: Copying the raw drives into files, making loop devices, and then trying to import those into ZFS *might* work. It might also fail rather badly. You might want to ask on the kernel developer mailing list, perhaps there is a ZFS or VFS developer there who can answer that question. Here is some explanation why it might fail: If you do that, you end up going through ZFS twice for a single IO request: User space IO request goes into ZFS (through the VFS layer at the kernel's top interface), gets decoded into disk sectors and blocks, and goes to the low-level block device drivers. Since that is a loop device driver, it goes back into VFS layer as a file system request, and then back into ZFS, and finally to a real block device. But by this point, if there are any data structures that are *per IO request* in either the VFS layer or in ZFS itself, they have been used twice. The most popular example for such data structures are locks (which protect data structure): Doing something like this has the potential for deadlocking (I've learned this the hard way).

If your suggestion doesn't work, my first suggestion would be a hardware hack. You still have functioning HBAs or SATA controllers; maybe you can quickly borrow enough adapter cables (octopus cables) and power splitters to run all disks. Disks work fine sitting on a work bench or office table. The second suggestion: To prevent having to make two trips through the *same* file system, put a network in between: Make the loopback devices on the second node, make them into iSCSI targets (using the iSCSI server whose name I can't remember), then on the original node set up the iSCSI initiator (a.k.a. client), and mount them this way. Will run slower (extra trip across the network), but higher probability of success.