Hi everyone, I just registered on the forum to ask for help with my FreeBSD NAS.

The hardware is an HP ProLiant MicroServer Gen8 with two 3 TB WD Red drives.

The system is FreeBSD 12.0-RELEASE-p4 with the default kernel.

I have configured periodic scrubs of both pools, starting every Tuesday morning when the NAS is otherwise idle. A scrub normally takes about 2-4 minutes for zroot and 3-4 hours for zstore. Everything worked fine for almost two years after the first install.
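As a rough sanity check on what "normal" means here, the 3-4 hour figure can be turned into an average scrub rate. This is only a back-of-the-envelope sketch: it assumes the 1.33T that zpool list reports as ALLOC for zstore is in TiB and that a scrub reads approximately that much data. `scrub_rate_mib_s` is a helper name I made up for illustration.

```shell
#!/bin/sh
# Average scrub rate in MiB/s, given allocated space (TiB) and duration (hours).
# Hypothetical helper for illustration; not part of any ZFS tooling.
scrub_rate_mib_s() {
    awk -v tib="$1" -v hours="$2" \
        'BEGIN { printf "%.0f\n", tib * 1024 * 1024 / (hours * 3600) }'
}

# zstore: ALLOC 1.33T, normal scrub duration 3-4 hours (both figures from this post)
echo "4 h scrub: $(scrub_rate_mib_s 1.33 4) MiB/s"
echo "3 h scrub: $(scrub_rate_mib_s 1.33 3) MiB/s"
```

Under those assumptions a healthy zstore scrub averages roughly 97-129 MiB/s over the whole run, which gives a baseline to compare against when a scrub is crawling.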

Some time after the upgrade to 12.0, scrubs began to "fail" every now and then (not necessarily on the first run): the scrub proceeds at extremely low speed and almost completely hangs the system while it is running.

For example, logging in over SSH with PuTTY takes around 20-30 seconds between entering the password and getting a prompt, and opening the Samba share from a Windows machine takes 2-3 minutes.

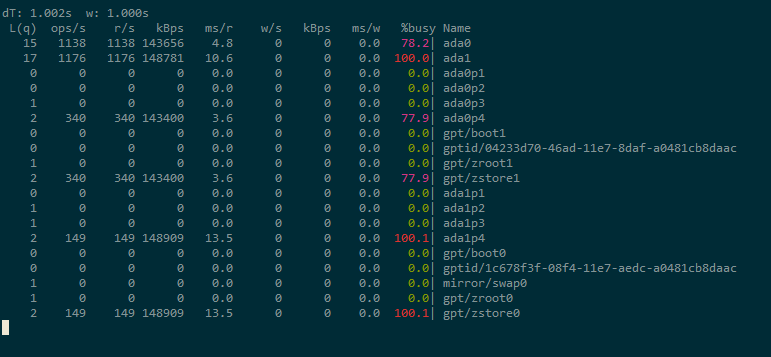

gstat during these scrubs will look like this:

When this happens, stopping the scrub makes the system responsive again instantly; for example, I can then transfer a file over SMB at full gigabit speed, both to and from the NAS. After a reboot, restarting the scrub makes it run at the "normal" speed.

I honestly lack the FreeBSD and ZFS knowledge needed to investigate this further, and would like to know how to rule out a hardware issue (perhaps with the controller or a cable). I tried moving the disks to the unused bays, but to no avail. The SMART data looks fine.

Any help would be greatly appreciated. Thanks!

gpart show output:

```
gpart show
=>        40  5860533088  ada0  GPT  (2.7T)
          40        2008        - free -  (1.0M)
        2048        1024     1  freebsd-boot  (512K)
        3072        1024        - free -  (512K)
        4096     4194304     2  freebsd-swap  (2.0G)
     4198400    31457280     3  freebsd-zfs  (15G)
    35655680  5824671744     4  freebsd-zfs  (2.7T)
  5860327424      205704        - free -  (100M)

=>        40  5860533088  ada1  GPT  (2.7T)
          40        2008        - free -  (1.0M)
        2048        1024     1  freebsd-boot  (512K)
        3072        1024        - free -  (512K)
        4096     4194304     2  freebsd-swap  (2.0G)
     4198400    31457280     3  freebsd-zfs  (15G)
    35655680  5824671744     4  freebsd-zfs  (2.7T)
  5860327424      205704        - free -  (100M)
```

zpool list output:

```
zpool list
NAME     SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
zroot   14.9G  2.01G  12.9G        -         -    29%    13%  1.00x  ONLINE  -
zstore  2.70T  1.33T  1.38T        -         -    18%    49%  1.00x  ONLINE  -
```
