I made the mistake of not reading the release notes before upgrading FreeBSD.

I'm pasting the relevant part here so that others don't make the same mistake.

www.freebsd.org


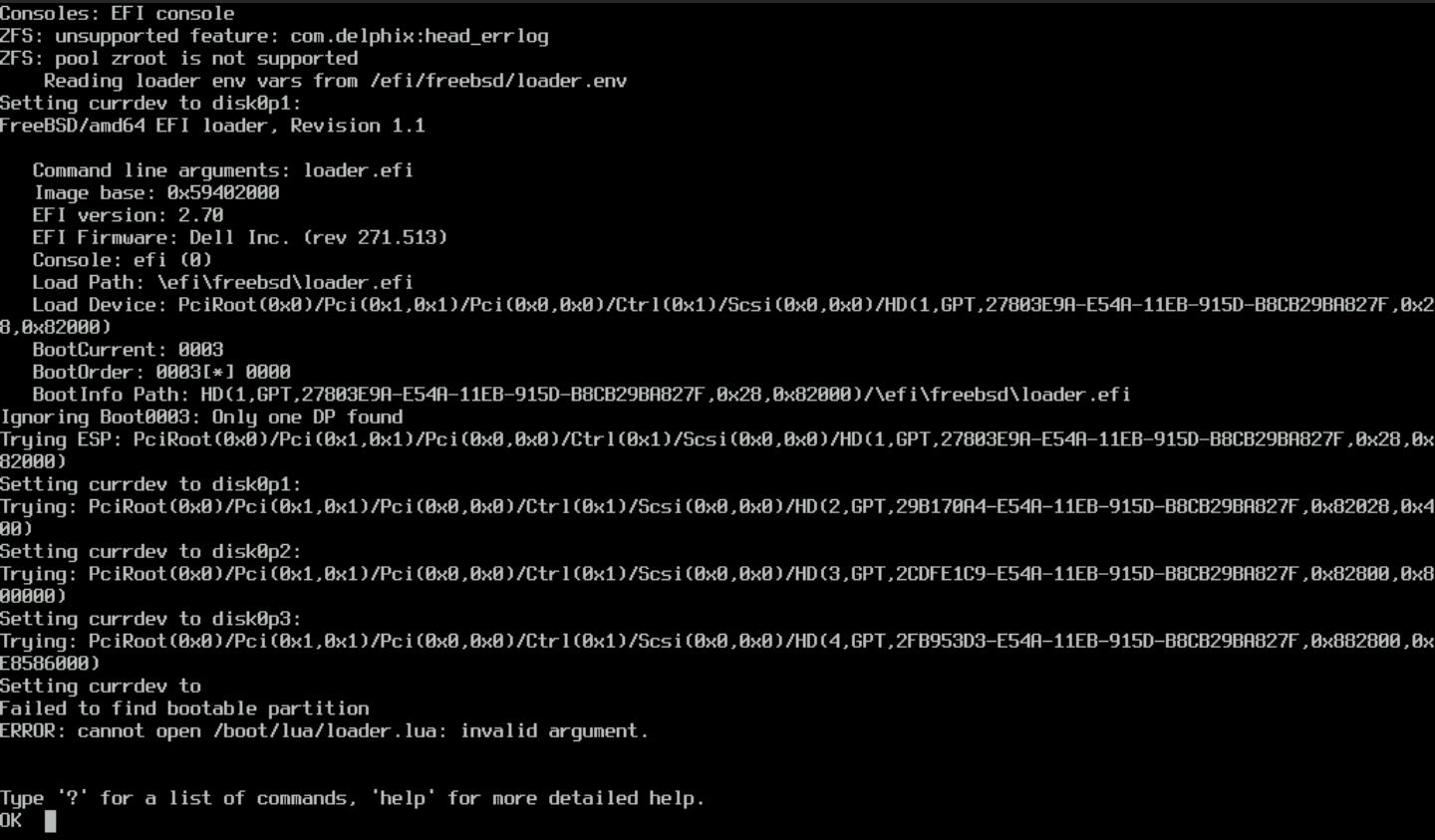

So I upgraded the zpool as usual, also updated the boot code, and then rebooted.

Now the system cannot find a bootable partition.

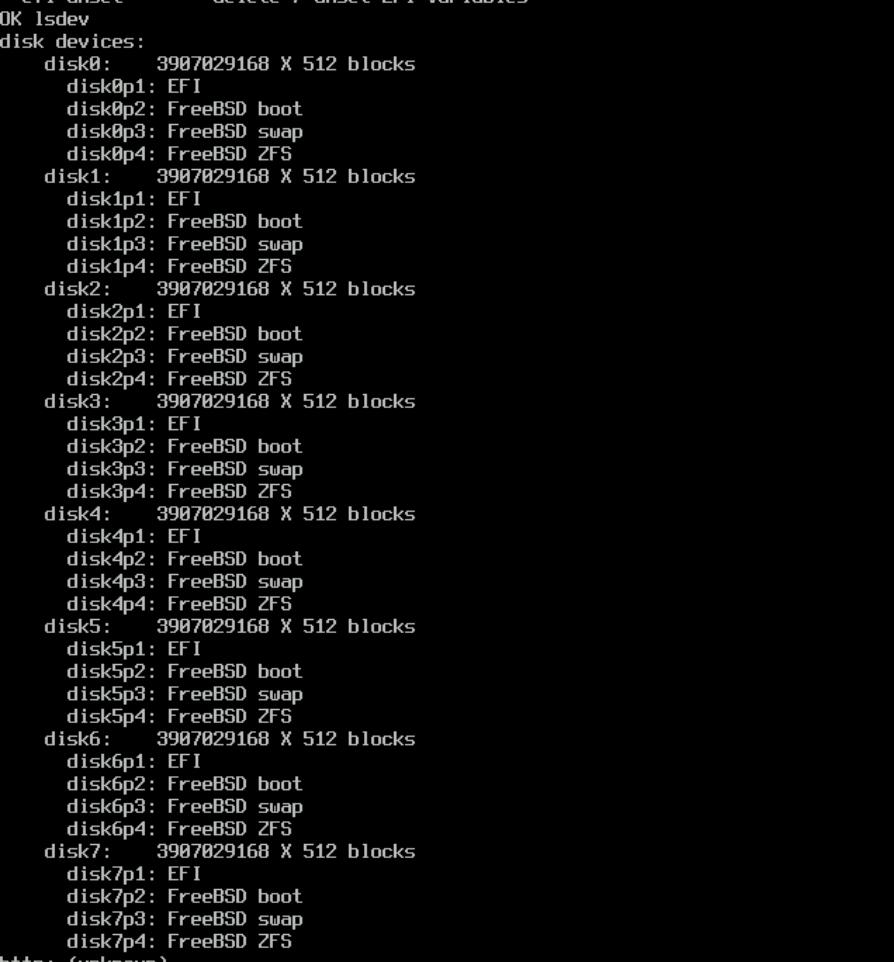

The disk info is as follows:

Because the server has limited bandwidth, mounting an ISO and booting from it is hard.

Is there any way to update `/efi/freebsd/loader.efi` and boot the system?

Thanks


FreeBSD 14.0-RELEASE Release Notes


Upgrading from Previous Releases of FreeBSD

Binary upgrades between RELEASE versions (and snapshots of the various security branches) are supported using the freebsd-update(8) utility. The binary upgrade procedure will update unmodified userland utilities, as well as unmodified GENERIC kernels distributed as a part of an official FreeBSD release. The freebsd-update(8) utility requires that the host being upgraded have Internet connectivity. Note that freebsd-update cannot be used to roll back to the previous release after updating to a new major version.

Source-based upgrades (those based on recompiling the FreeBSD base system from source code) from previous versions are supported, according to the instructions in /usr/src/UPDATING.

There have been a number of improvements in the boot loaders, and upgrading the boot loader on the boot partition is recommended in most cases, in particular if the system boots via EFI. If the root is on a ZFS file system, updating the boot loader is mandatory if the pool is to be upgraded, and the boot loader update must be done first. Note that ZFS pool upgrades are not recommended for root file systems in most cases, but updating the boot loader can avoid making the system unbootable if the pool is upgraded in the future. The bootstrap update procedure depends on the boot method (EFI or BIOS), and also on the disk partitioning scheme. The next several sections address each in turn.
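
As a minimal sketch of the ordering described above (boot loader first, pool upgrade second), the hypothetical shell function `loader_is_current` compares the loader installed with the new base system against the copy on the ESP. It assumes the ESP is mounted on `/boot/efi` and that the firmware boots `efi/freebsd/loader.efi`; both paths are assumptions to adjust for your system, and the pool name `zroot` in the usage comment is only an example. The notes also advise against upgrading root pools in most cases, so this is a guard, not a recommendation to upgrade.

```sh
#!/bin/sh
# Hypothetical helper: succeed only if the loader on the ESP is
# byte-identical to the one installed in /boot by the upgrade.
# Assumes the ESP is already mounted (default mount point /boot/efi).
loader_is_current() {
    new_loader=${1:-/boot/loader.efi}                 # loader from the upgraded base system
    esp_loader=${2:-/boot/efi/efi/freebsd/loader.efi} # loader the firmware actually boots
    [ -f "$esp_loader" ] || return 1                  # missing ESP loader: treat as not safe
    cmp -s "$new_loader" "$esp_loader"                # exit status 0 only when contents match
}

# Usage (as root; "zroot" is an assumed pool name):
#   if loader_is_current; then
#       zpool upgrade zroot
#   else
#       echo "Update the boot loader on the ESP before upgrading the pool." >&2
#   fi
```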

Notes for systems that boot via EFI, using either binary or source upgrades: There are one or more copies of the boot loader on the MS-DOS EFI System Partition (ESP), used by the firmware to boot the kernel. The location of the boot loader in use can be determined using the command `efibootmgr -v`. The value displayed for `BootCurrent` should be the number of the current boot configuration used to boot the system. The corresponding entry of the output should begin with a `+` sign, such as:

    +Boot0000* FreeBSD HD(1,GPT,f859c46d-19ee-4e40-8975-3ad1ab00ac09,0x800,0x82000)/File(\EFI\freebsd\loader.efi)
                          nda0p1:/EFI/freebsd/loader.efi (null)
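
A small sketch for pulling the two relevant fields out of that output, assuming the layout shown above: the entry in use starts with `+`, and the following line holds the partition name before a colon. `current_boot_entry` is a hypothetical helper written with `awk`; it is not part of `efibootmgr`.

```sh
#!/bin/sh
# Hypothetical helper: read `efibootmgr -v` output on stdin and print the
# File path and partition of the entry marked with "+" (the one in use).
current_boot_entry() {
    awk '
        found {
            # Line after the "+" entry, e.g. "nda0p1:/EFI/freebsd/loader.efi (null)"
            sub(/^[ \t]+/, "")
            split($0, parts, ":")
            print "partition=" parts[1]
            exit
        }
        /^[+]Boot/ {
            # Extract the text between "File(" and ")" on the entry line.
            if (match($0, /File\([^)]*\)/))
                print "file=" substr($0, RSTART + 5, RLENGTH - 6)
            found = 1
        }
    '
}

# Usage: efibootmgr -v | current_boot_entry
```

For the example output above, this should print `file=\EFI\freebsd\loader.efi` followed by `partition=nda0p1`.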

The ESP may already be mounted on `/boot/efi`. Otherwise, the partition may be mounted manually, using the partition listed in the `efibootmgr` output (`nda0p1` in this case): `mount_msdosfs /dev/nda0p1 /boot/efi`. See loader.efi(8) for another example.
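
A sketch of the "mount it only if it is not already mounted" step, assuming `mount` prints lines of the form `/dev/nda0p1 on /boot/efi (msdosfs, local)` as it does on FreeBSD. The helper name `ensure_esp_mounted` is hypothetical, and the device argument is whatever partition `efibootmgr -v` reports.

```sh
#!/bin/sh
# Hypothetical helper: mount the ESP on a mount point unless something
# is already mounted there.
ensure_esp_mounted() {
    esp_dev=$1                   # e.g. /dev/nda0p1, taken from efibootmgr -v
    mnt=${2:-/boot/efi}          # conventional ESP mount point
    if mount | grep -qF " on $mnt "; then
        return 0                 # already mounted, nothing to do
    fi
    mkdir -p "$mnt"
    mount_msdosfs "$esp_dev" "$mnt"
}

# Usage (as root): ensure_esp_mounted /dev/nda0p1 /boot/efi
```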

The value in the File field in the `efibootmgr -v` output, `\EFI\freebsd\loader.efi` in this case, is the MS-DOS name for the boot loader in use on the ESP. If the mount point is `/boot/efi`, this file will translate to `/boot/efi/efi/freebsd/loader.efi`. (Case does not matter on MS-DOSFS file systems; FreeBSD uses lower case.) Another common value for File would be `\EFI\boot\bootXXX.efi`, where `XXX` is `x64` for amd64, `aa64` for aarch64, or `riscv64` for riscv64; this is the default bootstrap if none is configured. Both the configured and default boot loaders should be updated by copying from `/boot/loader.efi` to the correct path in `/boot/efi`.
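
The path translation and copy described above can be sketched as follows. This is an illustration under stated assumptions, not an official tool: `efi_file_to_path` and `update_esp_loaders` are hypothetical names, the architecture defaults to `uname -p` (which reports `amd64`, `aarch64` or `riscv64` on FreeBSD), and `LOADER_SRC` is an invented override that defaults to `/boot/loader.efi`.

```sh
#!/bin/sh
# Hypothetical helper: turn an efibootmgr File value such as
# \EFI\freebsd\loader.efi into a path under the ESP mount point.
# MS-DOS names are case-insensitive; FreeBSD uses lower case.
efi_file_to_path() {
    printf '%s%s\n' "${2:-/boot/efi}" \
        "$(printf '%s' "$1" | tr '\\' '/' | tr '[:upper:]' '[:lower:]')"
}

# Hypothetical helper: copy the new loader over the configured loader and
# over the default \EFI\boot\bootXXX.efi loader, if each exists on the ESP.
update_esp_loaders() {
    file_value=$1                          # File(...) value from efibootmgr -v
    mnt=${2:-/boot/efi}                    # where the ESP is mounted
    arch=${3:-$(uname -p)}                 # amd64, aarch64 or riscv64 on FreeBSD
    src=${LOADER_SRC:-/boot/loader.efi}    # loader installed by the upgrade
    case $arch in
        amd64)   suffix=x64 ;;
        aarch64) suffix=aa64 ;;
        riscv64) suffix=riscv64 ;;
        *) echo "unsupported architecture: $arch" >&2; return 1 ;;
    esac
    # Configured loader first, then the default fallback loader.
    for dst in "$(efi_file_to_path "$file_value" "$mnt")" \
               "$mnt/efi/boot/boot$suffix.efi"; do
        if [ -e "$dst" ]; then
            cp "$src" "$dst" || return 1
            echo "updated $dst"
        else
            echo "skipped $dst (not present on the ESP)" >&2
        fi
    done
}

# Usage (as root, ESP mounted):
#   update_esp_loaders '\EFI\freebsd\loader.efi' /boot/efi
```

The sketch only overwrites loaders that already exist, so it will not create a default loader on an ESP that never had one; if the configured File value already points at `\EFI\boot\bootXXX.efi`, the same file is simply copied twice.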
