What are your thoughts on the browser handling DNS?

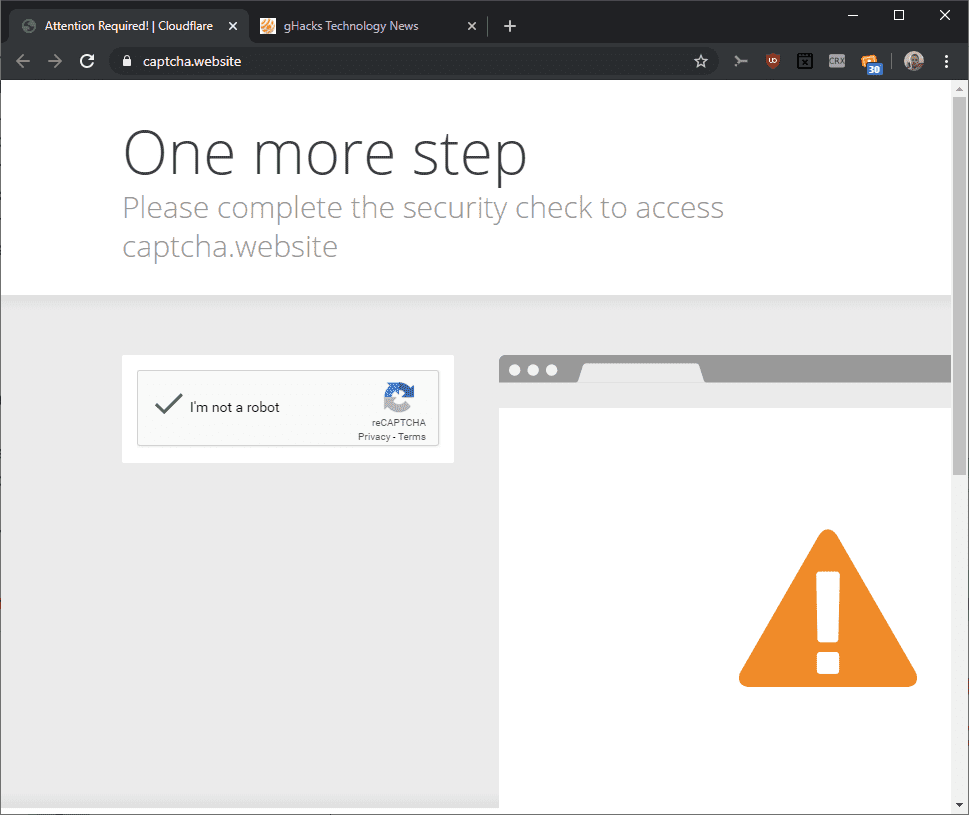

Mozilla is using Cloudflare for Firefox. Google is doing the same with Chrome.

It is a very very very bad idea.

For starters, indeed, it should be an OS function.

Then, it doesn't solve any problem we have, but it creates a ton of new ones.

Basically, the aim is not security but to move your DNS stream from your provider to Google and Cloudflare.

This is bad on many fronts, but let's start with the worst: on the internet, if you are not the paying customer, you are the product.

Remember that well. Many of you dislike your ISP (full disclosure: I work for one), but it is a company that operates in the same country you do and works by the same laws. Ideally, you have some political input into the legal framework it works under. And you are a paying customer; you have a contract.

None of that holds true for Google or Cloudflare. You have no legal relationship with them whatsoever; to most of the world's population they are foreign thugs, and they are completely unregulated. And they don't get a penny from you, which makes you just a piece of filet on the menu they serve their actual paying customers. Another eternal truth is that There Ain't No Such Thing As A Free Lunch. These companies need to make money, so they HAVE to sell you out, to someone, somehow.

My second objection is environmental. Basically, introducing cryptography where none is needed burns energy, and since DNS runs at enormous scale, we can expect a correspondingly large amount of CPU power to be needed to implement that shit. Even if we ignore the client side, a very basic back-of-a-napkin calculation for the server side goes like this: we currently need about 2 mW per user to provide DNS service (based on real-world data, probably a little low). With DoH I expect that to increase by a factor of four to five, i.e. an extra 6 to 8 mW per user. Extrapolated to 4 billion internet users, this gets us something like 30 MW of additional electrical power. That is the power requirement of a small town. This is not quite Satoshi-Nakamoto-sized bad yet, but Google's brain fart still visibly increases world power usage for no gain, at a time when we desperately need to reduce it. Not to mention the thousands of additional servers that have to be built, transported, installed, de-installed, transported again and scrapped every five to seven years, for this alone.
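For anyone who wants to check the napkin math, here is a minimal sketch of the 30 MW estimate. The inputs (about 2 mW per user for plain DNS today, a four- to fivefold increase with DoH, 4 billion users) are just the rough assumptions stated above, not measured data, and the function name is made up for illustration.

```python
# Back-of-a-napkin estimate of the extra server-side power DoH would need.
# All inputs are the rough assumptions from the text, not measurements.

BASELINE_W_PER_USER = 2e-3  # ~2 mW per user for plain DNS today (assumed)
USERS = 4e9                 # ~4 billion internet users (assumed)


def extra_power_mw(factor: float) -> float:
    """Additional power in megawatts if per-user DNS cost grows by `factor`."""
    # Only the increase counts as "additional": factor 4 means 3x the baseline on top.
    extra_per_user_w = BASELINE_W_PER_USER * (factor - 1)
    return extra_per_user_w * USERS / 1e6  # watts -> megawatts


for factor in (4, 5):
    print(f"factor {factor}: ~{extra_power_mw(factor):.0f} MW additional")
```

This prints roughly 24 MW for a factor of four and 32 MW for a factor of five, which brackets the "something like 30 MW" figure.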

My third objection may seem strange to you. Right now, in many countries, DNS is the lever used to implement Internet censorship on behalf of whichever institutions are powerful enough to demand it. It is comparatively cheap, and the obstacle it creates is high enough to satisfy those requiring it but low enough that most of us can live with it. It is foolish to assume that you can simply out-power those institutions. Most of us in the first world live one court decision away from something like an electronic Great Wall of China implementing the local censorship regime. By making DNS-based blocking ineffective, the widespread adoption of DoH might trigger exactly that decision.

My fourth objection is quality. Google and Cloudflare sit somewhere out there, while we, the ISPs, sit directly where you connect. We can, and often do, provide better, lower-latency DNS service than Google and Cloudflare possibly could. Additionally, the cryptography inherent in DoH will probably cause additional, quite visible latency penalties, and more DNS latency makes the Internet feel "slower" to you. The cryptography will also increase complexity and thus operational risk (leading to lower service availability), as well as the attack surface for intruders, making DNS services more vulnerable and therefore, again, less available and less trustworthy.

In sum, DNS will be slower, less trustworthy, and it will fail more often.

And my fifth objection is auto-configuration and discovery. Google is messing with a vast ecosystem of existing auto-configuration and discovery mechanisms, such as resolvers handed out via DHCP or split-horizon DNS in company networks. So far, they have barely any answers to the questions arising from that.

There are probably a few more, but that should suffice as an intro.