Hi all,

Several days ago, I used freebsd-update for what I thought was a routine minor upgrade of my VPS running 13.0, to bring it to p1. Something broke. The VPS would not boot:

ZFS: out of temporary buffer space

A quick search brought me to this page:

FreeBSD 13.0 upgrade zfs: out of temporary buffer space

It would appear that as part of the zpool upgrade that the boot code is not updated. There may have been a message about this but I didn't see it. I downloaded the FreeBSD 13-RELEASE mem stick image to a USB stick and booted that. You can repair the boot code with the following command

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i <gpart index of freebsd-boot> <block device>
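
To make the placeholders concrete, here is a minimal sketch of how I read that command for my disk. It only prints the command line, so it can be checked before anything is written to the disk. The disk name vtbd0 is from my VPS; the index 1 is purely a placeholder assumption, and the helper name bootcode_cmd is made up for illustration. The real index should be whatever gpart show vtbd0 reports for the freebsd-boot partition.

```shell
#!/bin/sh
# Sketch only: prints the bootcode command instead of running it.
# DISK is the disk named in this post; BOOT_INDEX=1 is an assumed placeholder.
DISK="vtbd0"
BOOT_INDEX="1"

# Build the gpart command line for a given disk ($1) and freebsd-boot index ($2).
# bootcode_cmd is a hypothetical helper, not part of FreeBSD.
bootcode_cmd() {
    printf 'gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i %s %s\n' "$2" "$1"
}

# "gpart show vtbd0" lists the partitions; the freebsd-boot row gives the index.
bootcode_cmd "$DISK" "$BOOT_INDEX"
```

Printing first is a deliberate choice: writing boot code to the wrong partition index is hard to undo from a rescue environment.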

I used the emergency boot facility at the hosting company, Transip, to boot and issue that gpart command. It seems to have worked, but when I reboot I end up at the FreeBSD boot prompt.

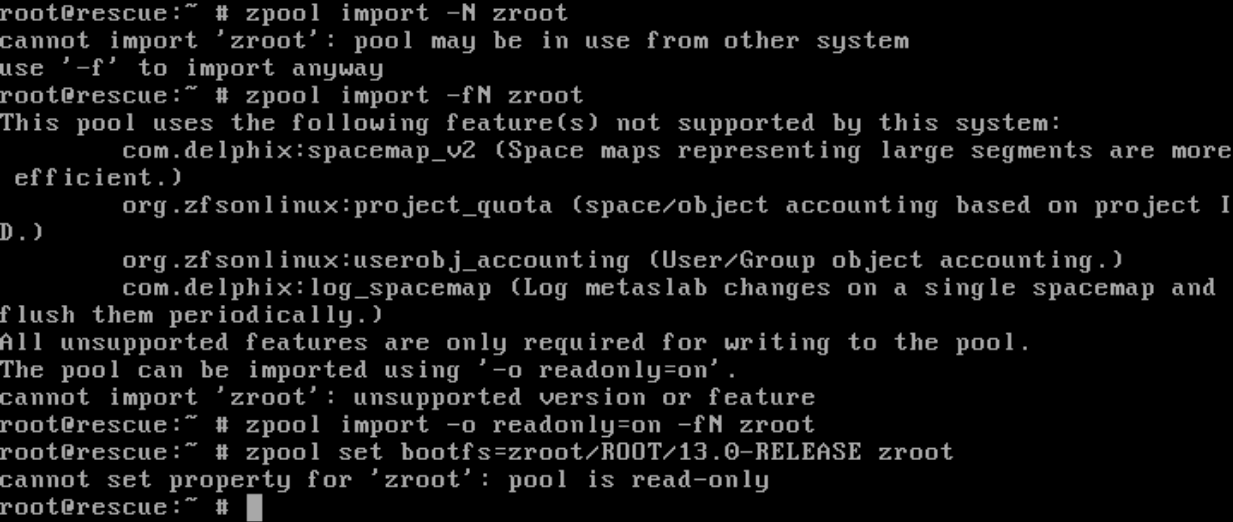

Someone on Reddit suggested booting again from the emergency environment and issuing these commands:

- zpool import -N zroot

- zpool set bootfs=zroot/ROOT/13.0-RELEASE zroot
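
As a sketch of that sequence with my pool and dataset names filled in, the snippet below prints the commands in order rather than running them. The last two lines (zpool get bootfs to read the property back, and zpool export before rebooting) are my own assumption about sensible follow-up steps, not part of the Reddit suggestion. The helper name bootfs_cmds is made up for illustration.

```shell
#!/bin/sh
# Sketch only: prints the zpool commands instead of running them.
POOL="zroot"
BOOTFS="zroot/ROOT/13.0-RELEASE"

# Print the command sequence for a pool ($1) and boot dataset ($2).
# bootfs_cmds is a hypothetical helper, not part of FreeBSD.
bootfs_cmds() {
    printf 'zpool import -N %s\n' "$1"           # import without mounting datasets
    printf 'zpool set bootfs=%s %s\n' "$2" "$1"  # point the pool at the boot dataset
    printf 'zpool get bootfs %s\n' "$1"          # assumption: read the property back
    printf 'zpool export %s\n' "$1"              # assumption: export before rebooting
}

bootfs_cmds "$POOL" "$BOOTFS"
```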

The disk on my VPS is vtbd0.

The ZFS root partition is: zroot/ROOT/13.0-RELEASE

How do I get my VPS to boot again? As I said above, I can't get any further than the boot prompt right now.

Thank you