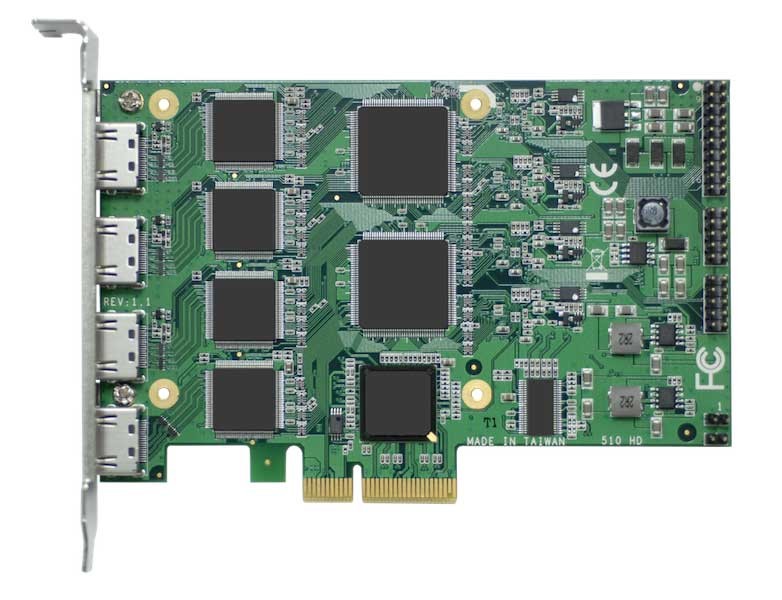

I guess the data on the source server is already compressed, if it's something like mp4. If you can do any further compression before the transfer, that might be worth exploring. For example, can you reduce the video resolution during capture/encoding, or do you need the full resolution? What is the absolute minimum video resolution you need? Anything you can do to cut down the volume of data to be transferred should help, provided you have a sufficiently meaty CPU (and possibly a hardware compression coprocessor) on the video capture side. You can of course get video compression accelerator cards like this, although I don't know if FreeBSD supports them.

DVP-7030HE is a PCIe-bus, software compression video capture card with 4 HDMI video and 4 audio inputs. DVP-7030HE supports H.264/MPEG4 compression format at up to Full HD 1080p for each channel. With an easy-to-use software development kit (SDK) and flexibility to stack multiple cards...

www.l-trondirect.com

Once you've captured and compressed it... using ssh for the transfer usually has some overhead because the data has to be encrypted and decrypted. Using ftp, or rsync talking to an rsync daemon (rsync:// rather than its default ssh transport), would avoid that; rsync is probably the preferred option nowadays. If the data is already highly compressed then ssh -C may not buy you much additional compression.
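To get a rough feel for why ssh -C tends not to help with already-compressed video, here's a minimal sketch using Python's zlib module. Random bytes stand in for mp4 data, which is an assumption on my part: real video isn't perfectly random, but it should be similarly hard to compress further. The repeated log line is just a made-up example of compressible data.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data, level)) / len(data)


# Stand-in for already-compressed video: random bytes (an assumption, not real mp4 data).
video_like = os.urandom(1_000_000)
# Stand-in for highly compressible data, e.g. a repetitive log file.
text_like = b"frame captured ok\n" * 50_000

print(f"video-like ratio: {compression_ratio(video_like):.3f}")
print(f"text-like ratio:  {compression_ratio(text_like):.3f}")
```

A ratio close to 1 for the video-like data means the compression pass costs CPU time without shrinking the transfer. Running the same function on a sample of your real capture files would tell you more than this stand-in does.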

To increase the link bandwidth... investigate using the lagg(4) link aggregation driver to obtain a faster link between the source and destination machines. It goes without saying that you want the fastest NICs and switch (if there is a switch and not a direct link) you can get. It all depends on where the bottlenecks are; you need to find that by experiment.
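As a rough illustration of the bottleneck idea: the end-to-end rate can't exceed the slowest stage, so once you've measured each stage separately you can see which one to work on first. A minimal sketch follows; the stage names and MB/s figures are made-up placeholders, not measurements from any real system.

```python
def bottleneck(stages: dict[str, float]) -> tuple[str, float]:
    """Return the (name, throughput in MB/s) of the slowest stage."""
    name = min(stages, key=stages.get)
    return name, stages[name]


def transfer_hours(size_gb: float, throughput_mb_s: float) -> float:
    """Hours needed to move size_gb gigabytes at throughput_mb_s (1 GB = 1000 MB)."""
    return size_gb * 1000 / throughput_mb_s / 3600


# Hypothetical measurements in MB/s; replace with your own numbers.
stages = {
    "source disk read": 400.0,
    "network link": 110.0,
    "target pool write": 250.0,
}

name, rate = bottleneck(stages)
print(f"bottleneck: {name} at {rate} MB/s")
print(f"1000 GB takes about {transfer_hours(1000, rate):.1f} hours")
```

With these placeholder numbers the network is the limit, so a faster link would pay off first. After that the target pool write speed becomes the next thing to look at.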

And I guess optimising the ZFS and RAID configuration on the target machine would help, but ralphbsz has already discussed that. That's probably the bottleneck once you've got the network bandwidth up and the data size minimised. There's some discussion here that might be useful:

Hi, Previously i had a 1.5tb server made up of all SSDs (2x512gb,2x256gb) and it was pretty fast, i was only ever limited by the network speed. Now i have a 20tb server made up of 4x5tb drives (1 redundant), with a 512gb ssd as l2arc and one of the 256gb drives as boot drive, and 189gb DDR4 RAM...

forums.servethehome.com

Perhaps it's worth experimenting with building a 2-node FreeBSD HAST cluster as the target, which might increase the speed at which you can dump data onto the target.