Here's how you can migrate your ZFS zroot pool to a new disk (da1). First, you will need a USB stick with FreeBSD installation media of the same version as the currently running OS.

Boot from the FreeBSD installation media and select Live System. Then log in as root, with no password.

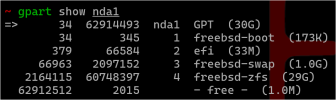

# The currently running disk (da0), which we are going to migrate to the new disk (da1)

Code:

# gpart show

=>       40  266338224  da0  GPT  (127G)
         40     532480    1  efi  (260M)
     532520       1024    2  freebsd-boot  (512K)
     533544        984       - free -  (492K)
     534528    4194304    3  freebsd-swap  (2.0G)
    4728832  261607424    4  freebsd-zfs  (125G)
  266336256       2008       - free -  (1.0M)

# camcontrol devlist

<Msft Virtual Disk 1.0> at scbus0 target 0 lun 0 (pass0,da0)

<Msft Virtual Disk 1.0> at scbus0 target 0 lun 1 (pass1,da1)
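To double-check the sizes that `gpart show` reports, the sector counts can be converted by hand. This is a small sketch that assumes 512-byte sectors, which is consistent with the 127G total shown for 266338224 sectors; `sectors_to_mib` is a helper name made up for illustration.

```sh
#!/bin/sh
# Hypothetical helper: convert a sector count to MiB.
# Assumption: 512-byte sectors (1 MiB = 1048576 bytes).
sectors_to_mib() {
    echo $(( $1 * 512 / 1048576 ))
}

sectors_to_mib 532480      # efi partition
sectors_to_mib 4194304     # freebsd-swap partition
sectors_to_mib 261607424   # freebsd-zfs partition
```

The three partitions come out at 260 MiB, 2048 MiB, and 127738 MiB (about 125 GiB), matching the listing.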

# Create a new partitioning scheme (GPT) on the new disk

gpart create -s gpt da1

# Add a new efi system partition (ESP)

gpart add -a 4k -l efiboot0 -t efi -s 260M da1

# Format the ESP as FAT32

newfs_msdos -F 32 -c 1 /dev/da1p1

# Add a new boot partition for legacy (BIOS) boot

gpart add -a 4k -l gptboot0 -t freebsd-boot -s 512k da1

# Add the protective master boot record and bootcode

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 2 da1

# Create a new swap partition

gpart add -a 1m -l swap0 -t freebsd-swap -s 2G da1

# Create a new ZFS partition using the rest of the disk space

gpart add -a 1m -l zfs0 -t freebsd-zfs da1
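The later `zpool create` step refers to the ZFS partition as `da1p4`, which relies on the partitions having been added in exactly the order above. As a hedged sketch, the index can be read from `gpart show` output instead of being assumed. `zfs_part_index` is a hypothetical helper, and it assumes the column layout seen in the `gpart show` listing for da0 (start, size, index, type).

```sh
#!/bin/sh
# Hypothetical helper: read `gpart show` output on stdin and print the index
# of the first freebsd-zfs partition.
# Assumption: columns are "start size index type (size)".
zfs_part_index() {
    awk '$4 == "freebsd-zfs" { print $3; exit }'
}

# On the live system this could be used as:
#   idx=$(gpart show da1 | zfs_part_index)
#   echo "ZFS partition is da1p${idx}"
```

If the printed index is not 4, the device name in the `zpool create` command would need to be adjusted accordingly.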

# Mount the ESP

mount_msdosfs /dev/da1p1 /mnt

# Create the directories and copy the EFI loader to the ESP

mkdir -p /mnt/efi/boot

mkdir -p /mnt/efi/freebsd

cp /boot/loader.efi /mnt/efi/boot/bootx64.efi

cp /boot/loader.efi /mnt/efi/freebsd/loader.efi

# Create the new UEFI boot variable and unmount the ESP

efibootmgr -a -c -l /mnt/efi/freebsd/loader.efi -L FreeBSD-14

umount /mnt

# Create mount points for zroot and zroot_new

mkdir /tmp/zroot

mkdir /tmp/zroot_new

# Create the new ZFS pool on the new disk (zroot_new)

zpool create -o altroot=/tmp/zroot_new -O compress=lz4 -O atime=off -m none -f zroot_new da1p4

# Import the original zroot

zpool import -R /tmp/zroot zroot

# Create a snapshot and send it to the zroot_new on the other disk.

zfs snapshot -r zroot@migration

zfs send -vR zroot@migration | zfs receive -Fdu zroot_new
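Before exporting the pools, it may be worth confirming that every dataset and snapshot arrived on the new pool. The sketch below compares the two name lists after stripping the pool name; `strip_pool` and `same_datasets` are illustrative helper names, not part of ZFS.

```sh
#!/bin/sh
# Hypothetical helper: drop the leading pool name from each dataset name,
# e.g. "zroot/ROOT/default@migration" -> "/ROOT/default@migration",
# then sort so that listing order does not matter.
strip_pool() {
    sed -e 's|^[^/@]*||' | sort
}

# Hypothetical helper: succeed if two newline-separated name lists are
# identical once the pool names are removed.
same_datasets() {
    a=$(printf '%s\n' "$1" | strip_pool)
    b=$(printf '%s\n' "$2" | strip_pool)
    [ "$a" = "$b" ]
}

# On the live system this could be used as:
#   old=$(zfs list -H -o name -t all -r zroot)
#   new=$(zfs list -H -o name -t all -r zroot_new)
#   same_datasets "$old" "$new" && echo "all datasets present"
```

This only compares names, not contents, so it is a sanity check rather than a full verification of the transfer.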

# Export zroot and zroot_new, then import zroot_new again under the new name (this renames zroot_new to zroot)

zpool export zroot

zpool export zroot_new

zpool import -R /tmp/zroot zroot_new zroot

# Set the default boot filesystem (bootfs)

zpool set bootfs=zroot/ROOT/default zroot

# Clean up the snapshots created for the migration

zfs list -t snapshot -H -o name | grep migration | xargs -n1 zfs destroy
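The `grep migration` filter above matches any snapshot whose name merely contains the word, including one on a dataset that happens to be called `migration`. A slightly stricter variant, sketched here, only selects names ending in `@migration`; `migration_snapshots` is a made-up helper name.

```sh
#!/bin/sh
# Hypothetical helper: keep only snapshot names that end in "@migration".
migration_snapshots() {
    grep -E '@migration$'
}

# On the live system this could be used as:
#   zfs list -t snapshot -H -o name | migration_snapshots | xargs -n1 zfs destroy
```

Since the snapshot was created recursively, `zfs destroy -r zroot@migration` should remove the same set in a single command.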

# Export the pool

zpool export zroot

# Shut down and remove the old disk

# After the reboot, select FreeBSD-14 from the UEFI boot menu. If everything works, remove the old UEFI boot entry with efibootmgr (for example, efibootmgr -B -b <bootnum>, using the boot number listed by efibootmgr -v)