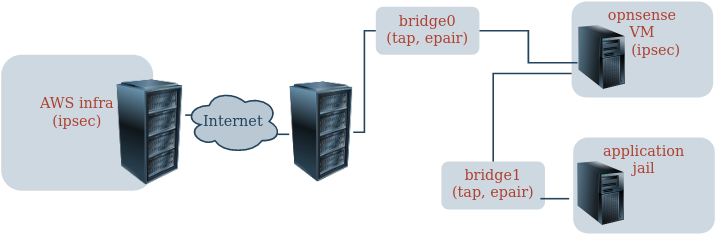

I am experiencing a weird problem: I cannot NAT IPsec traffic. I have a very basic setup: the host runs FreeBSD with an OPNsense VM and a vnet-based jail. The host's em0 is connected to the internet. bridge0 carries the network 192.168.251.0/24 and contains a tap interface of the OPNsense VM (simulating the "WAN" interface); the host NATs all outgoing traffic from this bridge. bridge1 simulates the LAN network: both the OPNsense LAN interface and the jail's epair are attached to it. The basic setup works: the jail's traffic goes through OPNsense, which sends it out via bridge0, the host then does the NAT, and everything is fine.
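For anyone trying to reproduce this, the host side of such a topology could look roughly like the `/etc/rc.conf` fragment below. It is a hypothetical sketch, not a copy of my configuration: the interface names `tap0`/`tap1`, the host address `192.168.251.1` on bridge0, and pre-creating the taps in rc.conf (instead of letting the VM manager create them) are all assumptions.

```shell
# /etc/rc.conf on the FreeBSD host (hypothetical sketch; names and addresses are assumed)
gateway_enable="YES"        # forward packets between em0 and bridge0
pf_enable="YES"             # pf does the NAT of bridge0 traffic going out em0

# tap0 = OPNsense "WAN" NIC, tap1 = OPNsense LAN NIC (assumed names)
cloned_interfaces="tap0 tap1 bridge0 bridge1"
ifconfig_tap0="up"
ifconfig_tap1="up"

# bridge0: simulated WAN segment 192.168.251.0/24 (host address assumed)
ifconfig_bridge0="inet 192.168.251.1/24 addm tap0 up"

# bridge1: simulated LAN; the jail's epair (e.g. epair0a) would be added
# to this bridge by the jail's startup commands, not here
ifconfig_bridge1="addm tap1 up"
```

The NAT itself is done by pf on the host via the rules in pf.conf.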

All outgoing traffic is NATed: I can send UDP (e.g. with nc or drill) as well as TCP and see everything working. BUT: as soon as an IPsec tunnel is established between our AWS infrastructure and OPNsense, the traffic to the IPsec endpoint is not NATed. With the following rules:

Code:

nat pass on em0 proto udp from 192.168.251.100 to any -> $ip_out
nat pass on em0 proto tcp from 192.168.251.100 to any -> $ip_out

the host does not NAT the packets. tcpdump on the pflog interface gives me:

Code:

00:00:00.000012 rule 22/0(match): block out on em0: 192.168.251.100 > 3.124.19.154: ip-proto-17

00:00:00.339727 rule 22/0(match): block out on em0: 192.168.251.100.4500 > 3.124.19.154.4500: UDP-encap: ESP(spi=0xc4f0d1ee,seq=0x22), length 1272

The connection I was trying to establish was from the jail to a VM inside our AWS infrastructure. The traffic goes through the IPsec tunnel, and 3.124.19.154 is the IP address of the AWS endpoint. The firewall is right to block these packets, because packets with a private source address from our internal network should not leave through the physical interface.

I have absolutely no idea what's wrong, and I'd be grateful for any hint, however small, and even more so for bigger enlightenment. Thanks!