Recently I installed an 11.0 server with two drives and chose the install option that mirrored them using ZFS. The machine ran fine in the colo rack for a few weeks, then became unresponsive.

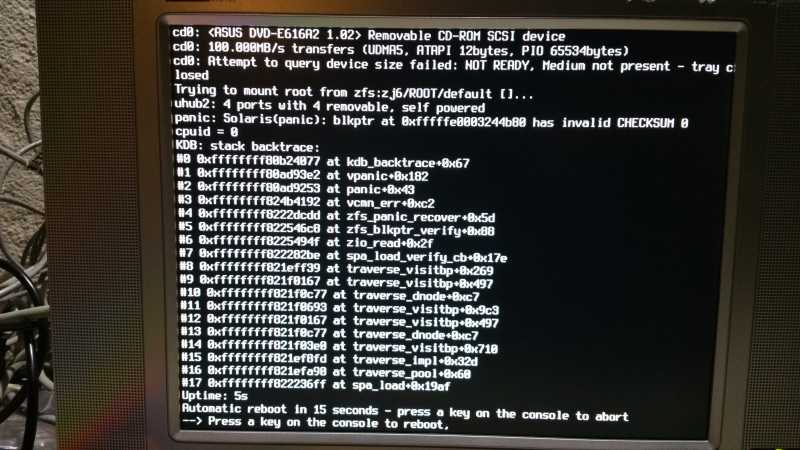

When I plugged in a console, it showed the machine stuck in a panic / boot loop caused by ZFS.

I would like suggestions on what caused this problem and how to recover from it.

I took a picture of the console screen, which is attached.
