Hi,

After a hardware failure (motherboard replacement), the server was reassembled. For the record, I didn't pay any attention to the disk order in the disk shelves when placing the 4 drives back.

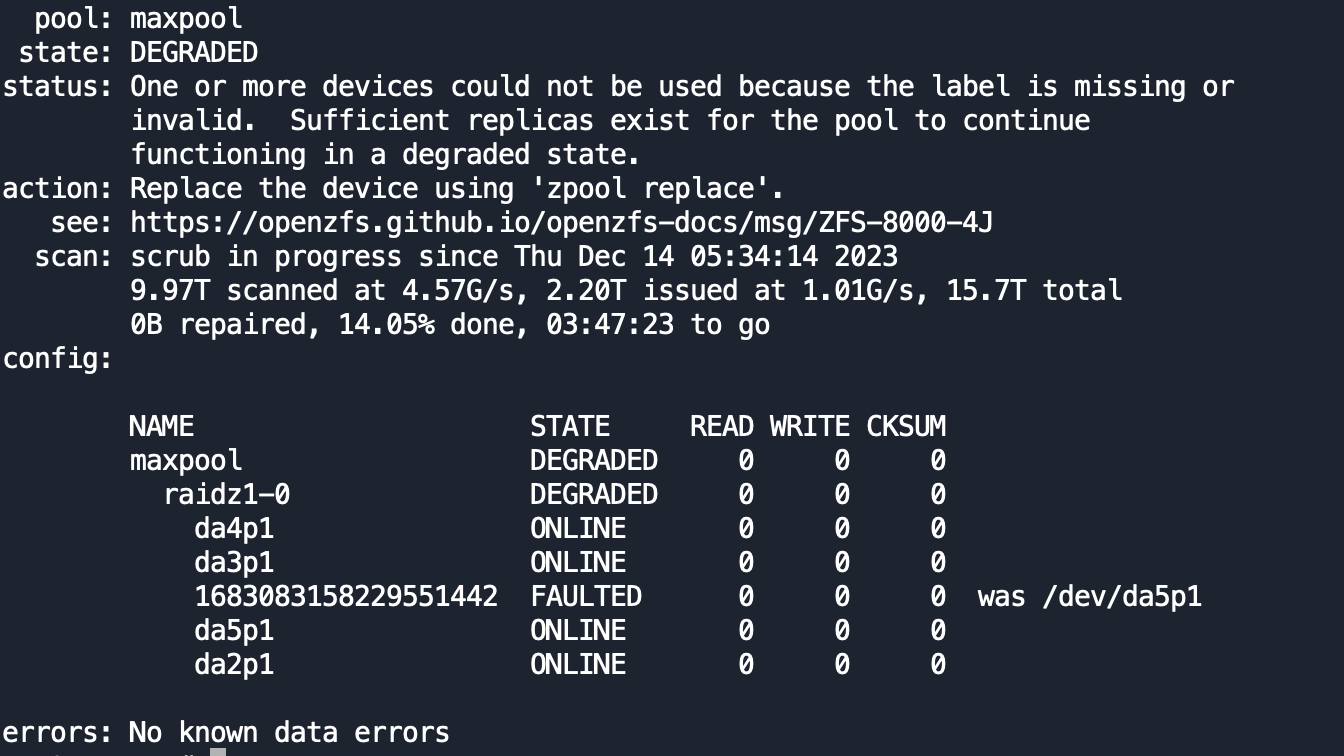

I have noticed that my raidz pool is in a degraded state.

This pool was built with 4 drives, not 5, and the problem seems to have something to do with the order of the drives, which might have changed.

Any idea how to fix that? camcontrol identifies all the devices with no issue.

Thanks
