For reasons unknown to me, I'm having issues importing a zpool (RAID 0, i.e. striped across two disks).

Whenever I issue the command

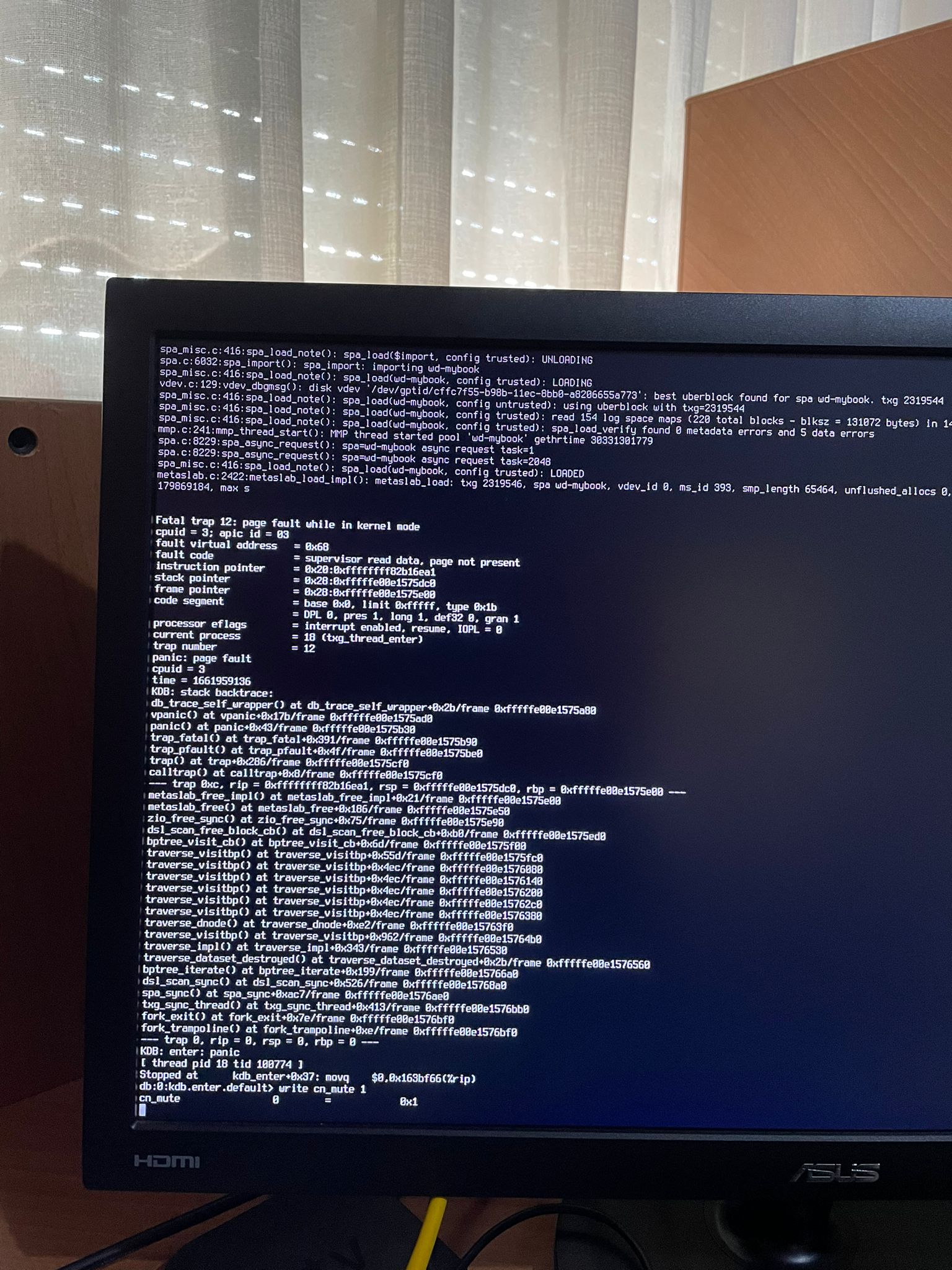

the system crashes and reboots showing this error:zpool import wd-mybook

The only way I can successfully import the zpool (though I don't know how to access the data in it) is to connect the disks after startup and manually issue the following command:

```
zpool import -f -F -o readonly=on
```

Importing it read-write (i.e. without `readonly=on`) causes the crash.

I have some critical data on that zpool and need to restore it to a working state.

I have tried importing it on another machine and had exactly the same issue: the machine throws the same error and then goes down.

How can I recover that pool without losing data? (Searching forums and elsewhere hasn't led me anywhere so far.)