I have a zpool on a Dell 730xd, which is used as a dedicated storage server and has 64GB of memory. The pool looks like this:

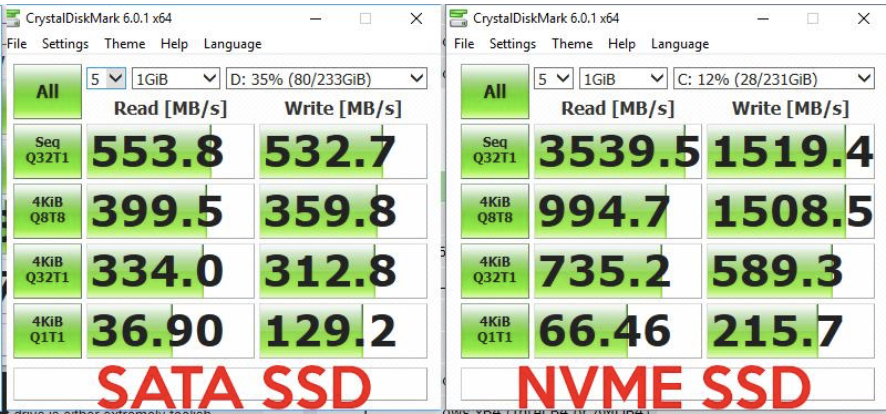

Every disk is a SATA6 SSD.

The pool consists solely of Proxmox virtual machine volumes mounted over iSCSI.

My question is: since the pool is already composed of SSDs, is it worth adding mirrored NVMe SSDs as ZIL (SLOG) and L2ARC devices to take advantage of the additional speed of NVMe?

Code:

    NAME                        STATE     READ WRITE CKSUM
    ssdpool                     ONLINE       0     0     0
      mirror-0                  ONLINE       0     0     0
        wwn-0x5002538e40be14b3  ONLINE       0     0     0
        wwn-0x5002538e40be1606  ONLINE       0     0     0
      mirror-1                  ONLINE       0     0     0
        wwn-0x500a07511c75d788  ONLINE       0     0     0
        wwn-0x500a07511c75da09  ONLINE       0     0     0
      mirror-2                  ONLINE       0     0     0
        wwn-0x500a075115b56adf  ONLINE       0     0     0
        wwn-0x500a075115b56b2a  ONLINE       0     0     0

Code:

    NAME                                   USED  AVAIL  REFER  MOUNTPOINT
    ssdpool                               4.36T  2.75T  26.5K  /ssdpool
    ssdpool/vm-100-disk-0                  364M  2.75T   353M  -
    ssdpool/vm-100-disk-1                 2.54T  2.75T  1.56T  -
    ssdpool/vm-101-disk-0                 11.7G  2.75T  7.69G  -
    ssdpool/vm-101-disk-2                 7.05G  2.75T  4.44G  -
    ssdpool/vm-102-disk-0                 18.7G  2.75T  13.7G  -
    ssdpool/vm-102-disk-1                 8.70G  2.75T  6.79G  -
    ssdpool/vm-103-disk-0                 8.06G  2.75T  5.87G  -
    ssdpool/vm-103-disk-1                 8.75G  2.75T  5.94G  -
    ssdpool/vm-103-state-pre_update       1.41G  2.75T  1.41G  -
    ssdpool/vm-104-disk-0                 8.47G  2.75T  4.47G  -
    ssdpool/vm-104-disk-1                 77.9G  2.75T  42.7G  -
    ssdpool/vm-106-disk-0                 5.09G  2.75T  4.83G  -
    ssdpool/vm-106-disk-1                  309G  2.75T   293G  -
    ssdpool/vm-107-disk-0                 6.19G  2.75T  6.19G  -
    ssdpool/vm-108-disk-0                 13.9G  2.75T  7.89G  -
    ssdpool/vm-109-disk-0                 5.40G  2.75T  3.77G  -
    ssdpool/vm-109-state-sup              2.88G  2.75T  2.88G  -
    ssdpool/vm-110-disk-0                  642G  2.75T   339G  -
    ssdpool/vm-110-disk-1                 48.1G  2.75T  48.1G  -
    ssdpool/vm-110-state-April_13_2020    15.3G  2.75T  15.3G  -
    ssdpool/vm-111-disk-0                 6.94G  2.75T  6.90G  -
    ssdpool/vm-112-disk-0                 6.27G  2.75T  6.03G  -
    ssdpool/vm-112-disk-1                 1.99G  2.75T  1.85G  -
    ssdpool/vm-113-disk-0                  157G  2.75T  91.1G  -
    ssdpool/vm-114-disk-0                  167G  2.75T  91.2G  -
    ssdpool/vm-115-disk-0                 6.91G  2.75T  6.91G  -
    ssdpool/vm-116-disk-0                 93.0G  2.75T  92.8G  -
    ssdpool/vm-117-disk-0                 1.70G  2.75T  1.61G  -
    ssdpool/vm-118-disk-0                  169G  2.75T   101G  -
    ssdpool/vm-120-disk-0                 8.16G  2.75T  6.97G  -
    ssdpool/vm-120-state-before_20200407  3.42G  2.75T  3.42G  -
    ssdpool/vm-120-state-pre_demo         2.11G  2.75T  2.11G  -
    ssdpool/vm-121-disk-0                 9.43G  2.75T  9.43G  -
    ssdpool/vm-122-disk-0                 2.05G  2.75T  2.05G  -
    ssdpool/vm-130-disk-0                 7.77G  2.75T  7.77G  -
    ssdpool/vm-130-disk-1                 16.9G  2.75T  16.9G  -