Hi,

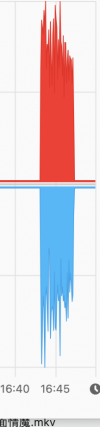

I created a new ZFS dataset to share via Samba. When I copy a file to it, the transfer starts at normal speed (about 100 MB/s) but soon drops to 20 MB/s. Copying to a Windows share stays at 100 MB/s the whole time. The Samba version is 4.13.17. What's the problem?

The dataset is set to compress=lz4 and dedup=on. The machine has 24 GB of memory, which should be enough to run ZFS.

smb4.conf

Code:

[global]
workgroup = WORKGROUP
server string = Samba Server Version %v
wins support = Yes
security = user

[share1]
path=
valid users =
writable = yes
