How can I automatically generate a sitemap of a website as a text file? A sitemap.txt file that only contains links.

It can be done manually, but maybe a shell or other script could do it? There's nothing in ports for this.

An automatically created XML sitemap could be useful too, but I'm more interested in generating a txt sitemap from the FreeBSD command line. I had temporarily forgotten what I already had, and since that is still available, maybe this isn't so urgent for me anymore.

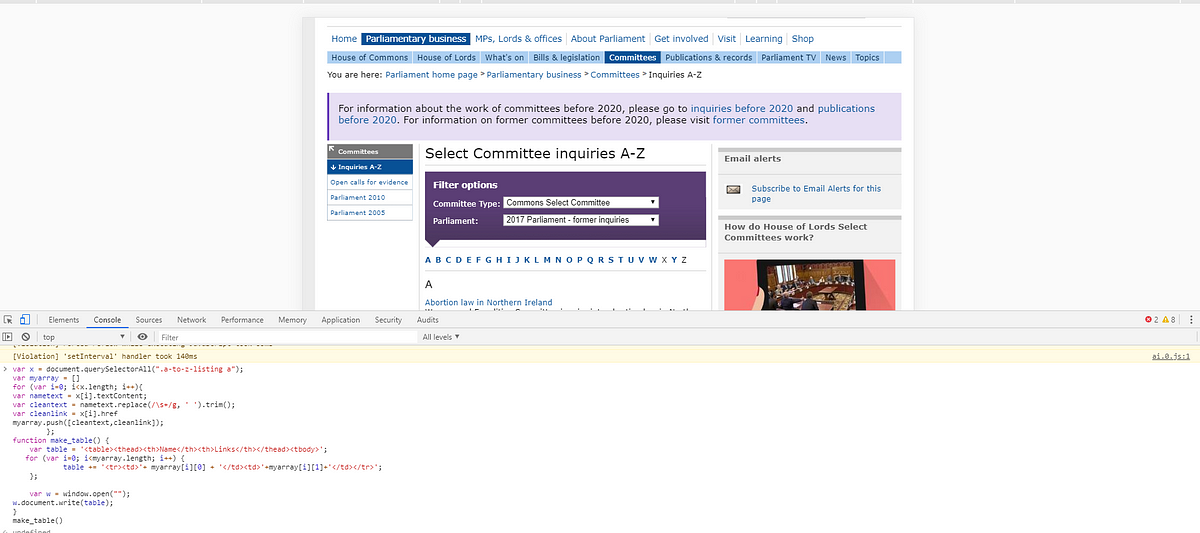

I copied and adjusted a PHP file on a website; when I type that web address in, it creates a txt sitemap in that directory. I guess a PHP file could be run from my computer as well. I'm more familiar with Perl scripts from ports doing similar tasks.

<?php

set_time_limit(0);

$page_root = '/directory'; // do not change this

$website = "https://mywebsite.net"; // your website address; change this

$textfile="sitemap.txt"; // name of the sitemap text file; no need to change

// list of file filters

$filefilters[]=".php";

$filefilters[]=".shtml";

// list of disallowed directories

$disallow_dir[] = "unused";

// list of disallowed file types

$disallow_file[] = ".bat";

$disallow_file[] = ".asp";

$disallow_file[] = ".old";

$disallow_file[] = ".save";

$disallow_file[] = ".txt";

$disallow_file[] = ".js";

$disallow_file[] = "~";

$disallow_file[] = ".LCK";

$disallow_file[] = ".zip";

$disallow_file[] = ".ZIP";

$disallow_file[] = ".CSV";

$disallow_file[] = ".csv";

$disallow_file[] = ".css";

$disallow_file[] = ".class";

$disallow_file[] = ".jar";

$disallow_file[] = ".mno";

$disallow_file[] = ".bak";

$disallow_file[] = ".lck";

$disallow_file[] = ".BAK";

$disallow_file[] = ".bk";

$disallow_file[] = "mksitemap";

// simple compare function: equals

function ar_contains($key, $array) {

foreach ($array as $val) {

if ($key == $val) {

return true;

}

}

return false;

}

// better compare function: contains

function fl_contains($key, $array) {

foreach ($array as $val) {

// echo $key.'-------'.$val.'<br>';

$pos = strpos($key, $val);

if ($pos === false) continue; // strict check: strpos() returns 0 for a match at the start of the string

return true;

}

return false;

}

// this function changes a substring($old_offset) of each array element to $offset

function changeOffset($array, $old_offset, $offset) {

$res = array();

foreach ($array as $val) {

$res[] = str_replace($old_offset, $offset, $val);

}

return $res;

}

// this walks recursively through all directories starting at page_root and

// adds all files that fit the filter criteria

// taken from Lasse Dalegaard, http://php.net/opendir

function getFiles($directory, $directory_orig = "", $directory_offset="") {

global $disallow_dir, $disallow_file, $allow_dir,$filefilters,$timebegin;

if ($directory_orig == "") $directory_orig = $directory;

if($dir = opendir($directory)) {

// Create an array for all files found

$tmp = Array();

// Add the files

while(($file = readdir($dir)) !== false) { // strict check so an entry named "0" does not end the loop

// Skip ".", ".." and hidden entries

if($file != "." && $file != ".." && $file[0] != '.' ) {

// If it's a directory, list all files within it

if(is_dir($directory . "/" . $file)) {

$disallowed_abs = fl_contains($directory."/".$file, $disallow_dir); // match a disallowed name anywhere in the full path

$disallowed = ar_contains($file, $disallow_dir); // match the bare directory name exactly

// the allow-list checks were dropped: $allow_dir is never defined and their results were unused

if ($disallowed || $disallowed_abs) continue;

{

$tmp2 = changeOffset(getFiles($directory . "/" . $file, $directory_orig, $directory_offset), $directory_orig, $directory_offset);

if(is_array($tmp2)) {

$tmp = array_merge($tmp, $tmp2);

}

}

} else { // files

if (fl_contains($file, $filefilters)==false) continue;

if (fl_contains($file, $disallow_file)) continue;

array_push($tmp, str_replace($directory_orig, $directory_offset, $directory."/".$file));

}

}

}

// Finish off the function

closedir($dir);

return $tmp;

}

}

function WriteTextFile($filename,$somecontent)

{

if (is_writable($filename)) {

// Open $filename in write mode ('w'), which truncates the file,

// so $somecontent replaces any existing content when we fwrite() it.

if (!$handle = fopen($filename, 'w')) {

echo "Cannot open file ($filename)<br>";

return false;

}

// Write $somecontent to our opened file.

if (fwrite($handle, $somecontent) === FALSE) {

echo "Cannot write to file ($filename)<br>";

return false;

}

//echo "Success, wrote ($somecontent) to file ($filename)";

fclose($handle);

} else {

echo "The file $filename is not writable<br>";

return false;

}

return true;

}

function replacespecailstr($srcstr)

{

$srcstr=str_replace('&','&amp;',$srcstr);

return $srcstr;

}

echo 'Getting File List...<br>';

$a = getFiles($page_root);

echo 'Creating Text Sitemap...<br>';

$text='';

$texthandle = fopen($textfile, 'w');

if ($texthandle === false) die('can not open '.$textfile.'<br>');

$count=0;

foreach ($a as $file) {

$text=str_replace("%2F","/",$website.rawurlencode(str_replace(".php", "", $file)));

if(!fwrite($texthandle,$text."\r\n"))

{

echo 'can not write '.$text.' <br>';

break;

}

$count++;

}

fclose($texthandle);

echo 'create ok, count='.$count.'<br>';

?>
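
For comparison, the same directory walk can probably be written more compactly with PHP's SPL iterators. This is only a sketch under some assumptions: the function name `build_sitemap_lines` and its parameters are made up for illustration, files are matched by exact extension rather than by substring as in the script above, and the `.php` extension is kept in the URLs instead of being stripped.

```php
<?php
// Sketch only: build_sitemap_lines() and its parameter names are hypothetical,
// they do not come from the script above.

// Walk $root recursively and return one URL per file with a wanted extension.
function build_sitemap_lines(string $root, string $baseUrl, array $extensions, array $skipDirs): array
{
    $root = rtrim($root, '/');
    $urls = [];

    $dirs = new RecursiveDirectoryIterator($root, FilesystemIterator::SKIP_DOTS);

    // Reject hidden entries and disallowed directories before descending into them.
    $filter = new RecursiveCallbackFilterIterator(
        $dirs,
        function (SplFileInfo $current) use ($skipDirs): bool {
            $name = $current->getFilename();
            if ($name[0] === '.') {
                return false; // hidden file or directory
            }
            if ($current->isDir()) {
                return !in_array($name, $skipDirs, true);
            }
            return true;
        }
    );

    // The default mode of RecursiveIteratorIterator yields leaves (files) only.
    foreach (new RecursiveIteratorIterator($filter) as $file) {
        if (!in_array($file->getExtension(), $extensions, true)) {
            continue;
        }
        // Path relative to the root, for example "/docs/page.php".
        $relative = substr($file->getPathname(), strlen($root));
        // URL-encode each path segment but keep the slashes between them.
        $encoded = implode('/', array_map('rawurlencode', explode('/', $relative)));
        $urls[] = rtrim($baseUrl, '/') . $encoded;
    }

    sort($urls);
    return $urls;
}

// Possible use from the command line (paths are placeholders):
// $urls = build_sitemap_lines('/directory', 'https://mywebsite.net', ['php', 'shtml'], ['unused']);
// file_put_contents('sitemap.txt', implode("\n", $urls) . "\n");
```

Saved under some file name of your choice and run with the `php` command-line binary, this would not need a web server at all. The list is built in memory first, so the output file is only written once the walk has finished.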
