Nice thread.


Depending on what you actually want to learn that is quite a span of (domain) knowledge.[...] fundamentals of how computer work at the material level and how it is related to driver kernel and software.

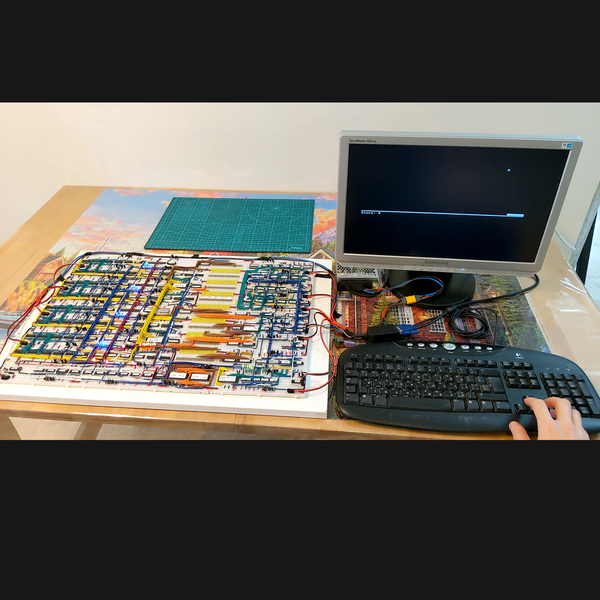

I think so too. Consider also that hardware projects with “lots of wires” can get you bogged down in electronics and timing issues that have little to do with logical design. Carefully stepped projects like Ben Eater's excellent ones could be a good basis for steering clear of those (mostly). With an Arduino kit you’ll have far fewer wires.[...] However, trying to learn a CPU from logic gates up is very challenging.

Ben Eater shows how a small CPU and its peripheral components interact, and how they can be programmed in a simple way (in assembly). For a good impression of a breadboard CPU, including a Q&A, have a look at the Tech Talk on Building a Custom TTL CPU.
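To make the fetch-decode-execute idea a bit more concrete, here is a minimal Python sketch of a toy 8-bit accumulator machine with 16 bytes of memory. It is only loosely inspired by breadboard CPUs like Ben Eater's: the opcode values, the mnemonics and the `run` helper are hypothetical choices for illustration, not his actual design. Each byte holds a 4-bit opcode in the high nibble and a 4-bit address or immediate value in the low nibble.

```python
from typing import List

# Hypothetical opcodes for a toy accumulator machine (illustrative only).
NOP, LDA, ADD, SUB, STA, LDI, JMP, JZ, OUT, HLT = (
    0x0, 0x1, 0x2, 0x3, 0x4, 0x5, 0x6, 0x8, 0xE, 0xF)


def run(memory: List[int], max_steps: int = 1000) -> List[int]:
    """Run a fetch-decode-execute loop; return the values emitted by OUT."""
    if len(memory) > 16:
        raise ValueError("toy machine has only 16 bytes of RAM")
    mem = list(memory) + [0] * (16 - len(memory))
    pc, a, zero = 0, 0, False          # program counter, accumulator, zero flag
    outputs: List[int] = []
    for _ in range(max_steps):
        instr = mem[pc]                    # fetch
        pc = (pc + 1) & 0xF                # 4-bit program counter wraps around
        op, arg = instr >> 4, instr & 0xF  # decode: opcode nibble, operand nibble
        if op == LDA:                      # execute
            a = mem[arg]
        elif op == ADD:
            a = (a + mem[arg]) & 0xFF
        elif op == SUB:
            a = (a - mem[arg]) & 0xFF
        elif op == STA:
            mem[arg] = a
        elif op == LDI:
            a = arg
        elif op == JMP:
            pc = arg
        elif op == JZ:
            if zero:
                pc = arg
        elif op == OUT:
            outputs.append(a)
        elif op == HLT:
            return outputs
        if op in (ADD, SUB):               # only arithmetic updates the flag
            zero = a == 0
    raise RuntimeError("step limit reached without HLT")


# Count down from 3 to 0, outputting each value.
PROGRAM = [
    0x1E,  # 0: LDA 14   load counter
    0xE0,  # 1: OUT      output accumulator
    0x3F,  # 2: SUB 15   subtract 1
    0x4E,  # 3: STA 14   store counter
    0x86,  # 4: JZ 6     done when zero
    0x61,  # 5: JMP 1    loop
    0xE0,  # 6: OUT
    0xF0,  # 7: HLT
    0, 0, 0, 0, 0, 0,
    3,     # 14: counter
    1,     # 15: constant 1
]

if __name__ == "__main__":
    print(run(PROGRAM))
```

Running it prints `[3, 2, 1, 0]`. On real hardware each of these steps is broken down further into control signals driven by the clock, which is exactly the part breadboard builds make visible.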

If you want little or no actual hardware and would rather concentrate on the logical CPU design, you could design in a hardware description language with simulation, probably VHDL or something comparable*. I don’t have any current references for that, however.
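As a small taste of purely logical design without any wiring, here is a minimal sketch that builds an n-bit ripple-carry adder from nothing but NAND gates. It is written in Python for brevity rather than in VHDL, so it only mimics what an HDL simulator does; the function names are hypothetical and chosen for illustration.

```python
from typing import Tuple


def nand(a: int, b: int) -> int:
    """The only primitive gate; inputs and output are 0 or 1."""
    return 1 - (a & b)


def and_(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(n, n)               # NOT(NAND) = AND


def or_(a: int, b: int) -> int:
    return nand(nand(a, a), nand(b, b))  # NAND(NOT a, NOT b) = OR


def xor(a: int, b: int) -> int:
    n = nand(a, b)                  # classic four-NAND XOR
    return nand(nand(a, n), nand(b, n))


def full_adder(a: int, b: int, cin: int) -> Tuple[int, int]:
    """Add three bits; return (sum bit, carry out)."""
    s1 = xor(a, b)
    total = xor(s1, cin)
    cout = or_(and_(a, b), and_(s1, cin))
    return total, cout


def ripple_add(x: int, y: int, width: int = 8) -> Tuple[int, int]:
    """Chain full adders bit by bit; return (sum mod 2**width, final carry)."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


if __name__ == "__main__":
    print(ripple_add(5, 7))      # no overflow
    print(ripple_add(200, 100))  # overflows 8 bits, carry set
```

A simulator in an HDL would additionally model propagation delays, which is where the clock and timing questions come in.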

For an overview, I can recommend Code: The Hidden Language of Computer Hardware and Software, 2nd edition (see Announcing “Code” 2nd Edition). It may not give you hands-on practical experience, but it does give an overview that addresses your original broad question. Have a look at the contents and preview text, and also at the companion website, which contains interactive examples.

Across the span from low-level hardware (basic TTL circuits) to driver software interacting with the kernel, I suggest you pick a point of interest to start from and see what you actually enjoy spending time on.

___

* Pre-VHDL, during my studies a long time ago, I took a university course in which we designed and simulated parts of a CPU in APL, including the system clock timing signals. The course was taught by Prof. Gerrit Blaauw, a co-designer of the IBM System/360 architecture.

Edit: this is narrower than your question, but as you are already engaged with FreeBSD and mentioned device drivers, I think it's at least worth mentioning:

- The Design and Implementation of the FreeBSD Operating System, 2nd edition, by Marshall Kirk McKusick, George V. Neville-Neil and Robert N. M. Watson

- FreeBSD Device Drivers by Joseph Kong