Hello,

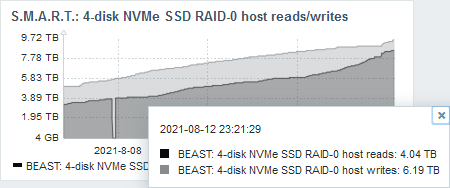

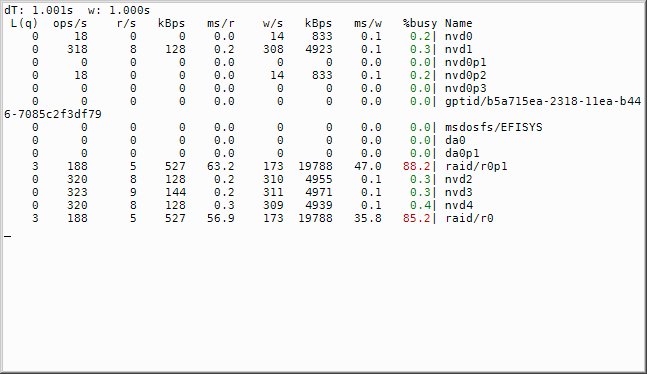

I have another question about the GEOM RAID class. I'm currently using it to join four PCIe 4.0 NVMe SSDs into a RAID-0 for temporary data, so no parity calculations are involved. The load on the device is a mix of linear and random I/O, roughly equally split between reads and writes:

Read/Write distribution on the array

Layout: 4 physical disks <-> GEOM RAID <-> GPT partition <-> UFS.

- The RAID has been created like this (the command is off the top of my head, but it should be correct according to `diskinfo /dev/raid/r0`):
  `# graid label -s 65536 DDF BEASTRAID RAID0 /dev/nvd1 /dev/nvd2 /dev/nvd3 /dev/nvd4`
- The partition has been created like this:
  `# gpart add -t freebsd-ufs -a 65536 -b 64 -l BEASTRAID /dev/raid/r0`
- Finally, the file system has been created like this, tuned towards my workload and file/folder structures to the best of my knowledge:
  `# newfs -E -L BEASTRAID -b 16384 -d 65536 -f 16384 -g 1073741824 -h 32 -t -U /dev/raid/r0p1`
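To make the layout above a bit more concrete, here is a small POSIX sh sketch of how a byte offset inside raid/r0p1 maps onto the four RAID-0 members. It assumes 512-byte sectors and takes the partition start (sector 64) and the 64 KiB strip size from the gpart and graid commands above; it ignores where graid keeps its DDF metadata, and the helper names `map_offset` and `is_strip_aligned` are hypothetical. Since gpart's `-a 65536` may have moved the real start, the value reported by `gpart show raid/r0` should be used instead of 64 if it differs.

```sh
#!/bin/sh
# Sketch: where does a byte offset inside raid/r0p1 land on the members?
# ASSUMPTIONS (hypothetical values, check against the real system):
#   - 512-byte logical sectors
#   - partition starts at sector 64 (the "-b 64" passed to gpart add)
#   - 64 KiB strip size (the "-s 65536" passed to graid label)
#   - members are striped in label order; graid metadata is ignored

SECTOR=512
PART_START_SECTOR=64
STRIP=65536
DISKS="nvd1 nvd2 nvd3 nvd4"
NDISKS=4

# map_offset OFFSET: print "<member> <byte offset on member>" for a byte
# offset relative to the start of the partition.
map_offset() {
    raid_off=$(( PART_START_SECTOR * SECTOR + $1 ))  # offset on raid/r0
    strip_no=$(( raid_off / STRIP ))                  # global strip index
    idx=$(( strip_no % NDISKS ))                      # 0-based member index
    field=$(( idx + 1 ))
    member=$(echo "$DISKS" | cut -d' ' -f"$field")
    member_off=$(( (strip_no / NDISKS) * STRIP + raid_off % STRIP ))
    echo "$member $member_off"
}

# is_strip_aligned: succeed if the partition start is a multiple of STRIP.
is_strip_aligned() {
    [ $(( PART_START_SECTOR * SECTOR % STRIP )) -eq 0 ]
}

if is_strip_aligned; then
    echo "partition start is strip-aligned"
else
    echo "partition start is NOT strip-aligned"
fi
map_offset 0        # first byte of the file system
map_offset 65536    # one strip further in
```

With the assumed start of sector 64 (32 KiB), the partition would begin half a strip into nvd1, so for example a 64 KiB write at the very beginning of the file system would be split across nvd1 and nvd2. If `gpart show` reports a start that is a multiple of 128 sectors (64 KiB), the partition is strip-aligned and this does not apply.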

gstat showing unusually high %busy values on the GEOM RAID device and its partition

So, as mentioned, raid/r0 is the array device, raid/r0p1 is the UFS-formatted GPT partition on it, and nvd1, nvd2, nvd3 and nvd4 are the GEOM RAID component disks. Both the partition/file system and the raw graid device show these high %busy values whenever there is I/O on the array. When this screenshot was taken, the load was a single thread writing a file to the machine via Samba/CIFS over plain Gigabit Ethernet, and transfer rates were as low as 20-30 MiB/s!

All Samba server processes were running at real-time priority level 30 to make sure they wouldn't slow down the transfer. There are far fewer Samba processes than cores/threads on the machine, so raising them to real-time priority doesn't starve anything else. It's done like this as superuser:

```sh
#!/bin/sh
# Raise every running smbd and nmbd process to real-time priority 30.
# Must be run as root; rtprio(1) takes the target PID as "-<pid>".
for pid in $(pgrep -x smbd) $(pgrep -x nmbd); do
    rtprio 30 -"${pid}"
done
```

With no CPU load present, linear transfer rates can exceed 4 GiB/s (locally, of course) and network transfers reach 70-80 MiB/s. Under heavy CPU load, though, everything is much slower: according to the simple diskinfo benchmarks, IOPS drop by a factor of about 10 and linear transfer rates by a factor of about 8.
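For a rough sense of scale, the linear figures above can be put side by side with the network link. This is plain integer arithmetic on the quoted numbers, assuming 1 GiB = 1024 MiB and ignoring Ethernet, TCP and SMB overhead; the IOPS drop of about 10× is not modelled here, and the variable names are made up for illustration.

```sh
#!/bin/sh
# Back-of-the-envelope comparison of the quoted throughput figures.
# ASSUMPTIONS: 1 GiB = 1024 MiB, 1 Gbit/s = 10^9 bit/s, no protocol overhead.

LINEAR_IDLE_MIBS=$(( 4 * 1024 ))   # "can exceed 4GiB/s" without CPU load
SLOWDOWN=8                         # "a factor of around 8" under CPU load
LINEAR_LOADED_MIBS=$(( LINEAR_IDLE_MIBS / SLOWDOWN ))

# Raw Gigabit Ethernet line rate, truncated to whole MiB/s.
GBE_MIBS=$(( 1000000000 / 8 / 1048576 ))

echo "linear rate under load: ~${LINEAR_LOADED_MIBS} MiB/s"
echo "GbE line-rate ceiling:  ~${GBE_MIBS} MiB/s"
```

On these linear numbers alone, the slowed-down array (about 512 MiB/s) would still be several times faster than what Gigabit Ethernet can carry (about 119 MiB/s), which puts the observed 20-30 MiB/s Samba rate into perspective.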

The UFS partition /dev/nvd0p2 on the single /dev/nvd0 SSD is entirely unaffected by this, so it only happens when GEOM RAID is involved. The single /dev/nvd0 disk is a Corsair Force MP600 2TB, whereas the GEOM RAID component disks are the same model, just one size smaller: 4 × Corsair Force MP600 1TB.

Is there a way to keep I/O fast and snappy on graid RAID-0 devices even under very high CPU load? If so, how can I do it?

Thank you very much!