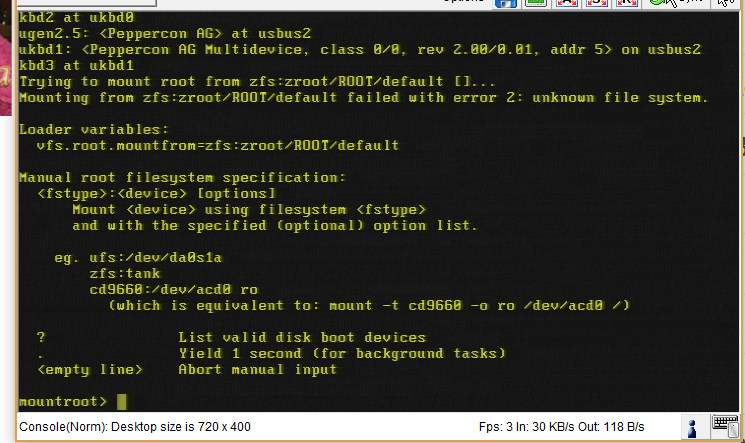

I have a server running FreeBSD 10.3 that I am suddenly unable to reboot. The server has been working fine for over 6 months, but until now it had not been rebooted for about 5 months. I do not remember ever upgrading the server, and there is no old kernel in /boot to suggest that the kernel has been updated. When I try to boot, I end up at the mountroot prompt.

When I boot into Rescue (the server is on Hetzner) I am able to mount the entire pool with the command:

zpool import -o altroot=/mnt zroot

All commands below were run from the Rescue system after the zpool import:

Code:

[root@rescue /mnt]# cat /mnt/boot/loader.conf

kern.geom.label.gptid.enable="0"

zfs_load="YES"

vfs.root.mountfrom="zfs:zroot/ROOT/default"

Code:

[root@rescue /mnt/boot]# mount

zroot/ROOT/default on /mnt (zfs, local, noatime, nfsv4acls)

zroot/tmp on /mnt/tmp (zfs, local, noatime, nosuid, nfsv4acls)

zroot/usr/home on /mnt/usr/home (zfs, local, noatime, nfsv4acls)

zroot/usr/ports on /mnt/usr/ports (zfs, local, noatime, nosuid, nfsv4acls)

zroot/usr/src on /mnt/usr/src (zfs, local, noatime, nfsv4acls)

zroot/var/audit on /mnt/var/audit (zfs, local, noatime, noexec, nosuid, nfsv4acls)

zroot/var/crash on /mnt/var/crash (zfs, local, noatime, noexec, nosuid, nfsv4acls)

zroot/var/log on /mnt/var/log (zfs, local, noatime, noexec, nosuid, nfsv4acls)

zroot/var/mail on /mnt/var/mail (zfs, local, nfsv4acls)

zroot/var/tmp on /mnt/var/tmp (zfs, local, noatime, nosuid, nfsv4acls)

zroot on /mnt/zroot (zfs, local, noatime, nfsv4acls)

Code:

[root@rescue /mnt]# cat /mnt/etc/rc.conf

hostname="stream"

keymap="swedish.iso.kbd"

ifconfig_em0="DHCP"

ifconfig_em0_ipv6="inet6 accept_rtadv"

sshd_enable="YES"

powerd_enable="YES"

# Set dumpdev to "AUTO" to enable crash dumps, "NO" to disable

dumpdev="AUTO"

zfs_enable="YES"

lighttpd_enable=YES

Code:

[root@rescue /mnt]# zpool list

NAME   SIZE   ALLOC  FREE   EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
zroot  8.12T  5.71T  2.42T  -         23%   70%  1.00x  ONLINE  /mnt

Code:

[root@rescue /mnt]# gpart show

=>          34  17578327997  mfid0  GPT  (8.2T)
            34            6         - free -  (3.0K)
            40         1024      1  freebsd-boot  (512K)
          1064          984         - free -  (492K)
          2048      4194304      2  freebsd-swap  (2.0G)
       4196352  17574129664      3  freebsd-zfs  (8.2T)
   17578326016         2015         - free -  (1.0M)

Code:

[root@rescue /mnt/boot]# zfs list

NAME                USED   AVAIL  REFER  MOUNTPOINT
zroot               5.71T  2.16T    96K  /mnt/zroot
zroot/ROOT          5.71T  2.16T    96K  none
zroot/ROOT/default  5.71T  2.16T  5.71T  /mnt
zroot/tmp            112K  2.16T   112K  /mnt/tmp
zroot/usr            688M  2.16T    96K  /mnt/usr
zroot/usr/home      5.51M  2.16T  5.51M  /mnt/usr/home
zroot/usr/ports      682M  2.16T   682M  /mnt/usr/ports
zroot/usr/src         96K  2.16T    96K  /mnt/usr/src
zroot/var           5.91M  2.16T    96K  /mnt/var
zroot/var/audit       96K  2.16T    96K  /mnt/var/audit
zroot/var/crash       96K  2.16T    96K  /mnt/var/crash
zroot/var/log        520K  2.16T   520K  /mnt/var/log
zroot/var/mail      5.03M  2.16T  5.03M  /mnt/var/mail

zroot/var/tmp 96K 2.16T 96K /mnt/var/tmp

I could not see anything odd in dmesg (included as .txt).
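In case anyone wants the same information in a single file, the commands above can be collected in one pass from the Rescue system. This is only a rough sketch: the `run` and `collect_diag` helpers, the `ALTROOT` and `OUT` variables, and the output path `/tmp/boot-diag.txt` are names I made up for illustration, and it assumes the pool is already imported under `/mnt` as shown earlier.

```shell
#!/bin/sh
# Hypothetical helper: gather the diagnostics shown in this post into one file.
# ALTROOT and OUT are assumptions; override them in the environment if needed.
ALTROOT="${ALTROOT:-/mnt}"
OUT="${OUT:-/tmp/boot-diag.txt}"

run() {
    # Write the command line as a header, then its output (stdout and stderr).
    printf '### %s\n' "$*"
    "$@" 2>&1 || printf '(command failed or is unavailable)\n'
    printf '\n'
}

collect_diag() {
    # Same commands as in the post, in the same order.
    run cat "$ALTROOT/boot/loader.conf"
    run mount
    run cat "$ALTROOT/etc/rc.conf"
    run zpool list
    run gpart show
    run zfs list
}

collect_diag > "$OUT"
```

Each section in the resulting file starts with a `### <command>` header, so the output of a single command is easy to find and paste into a reply.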

Please help me solve this problem. I also have KVM access if needed. Let me know if you need any other info. That pool contains 6 TB of very important data.
