Hey Guys!

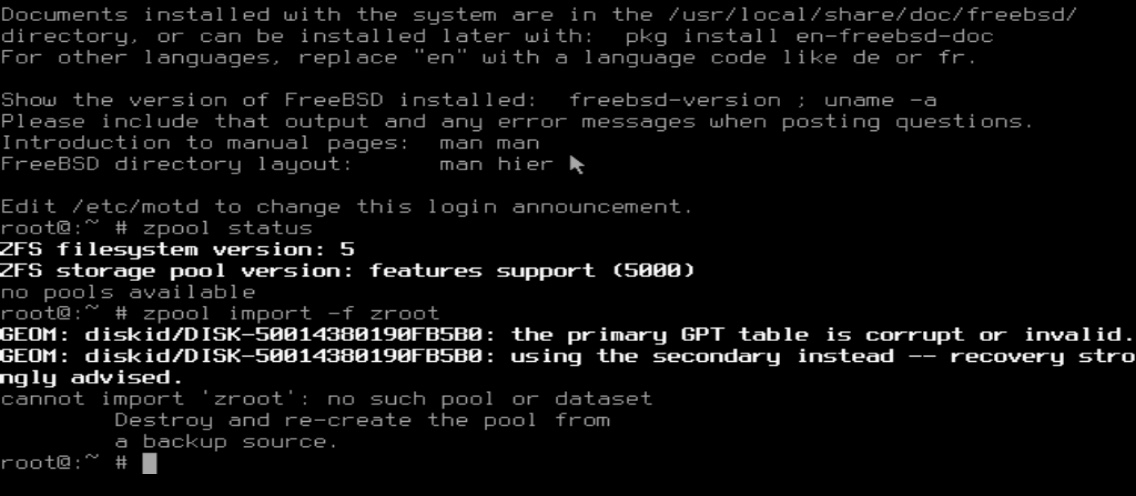

I'm currently trying to fix/recover a broken zroot pool.

My Setup:

- Drives: 2x Samsung 850 EVO 250GB SSDs (da0 and da1)

- Encryption: No

- RootOnZFS: YES

`zpool status zroot` showed that the first SSD (da0) was ONLINE without errors and the second SSD (da1) was DEGRADED. `gpart show da1` reported a corrupt GPT header (or something like that); da0 did not have a corrupt GPT header at that time.

I rebooted the server and then got the error message "ZFS: i/o error - all block copies unavailable" (both with da0 and da1 attached and with only da0).

I also tried to import the pool on macOS with openZFS

sudo zpool import -f zroot -o readonly=on

Code:

cannot import 'zroot': no such device in pool

Destroy and re-create the pool from a backup source.

and

sudo zpool import -F

Code:

pool: zroot

id: 7697653246985412200

state: UNAVAIL

status: The pool was last accessed by another system.

action: The pool cannot be imported due to damaged devices or data.

see: http://zfsonlinux.org/msg/ZFS-8000-EY

config:

zroot UNAVAIL insufficient replicas

media-8C776A20-7224-11E8-98B2-E8393529C350 ONLINE

da1p3 UNAVAIL cannot open

I booted the server from an 11.2-RELEASE USB stick (live) and then tried to import the pool

zpool import -f zpool

Now comes the part I don't fully understand.

It shows that the primary GPT table is corrupt or invalid, but when I check with `gpart show da0`, it doesn't show a corrupt GPT table...

Thanks in advance