My symptoms are typical and my error not uncommon: failure to update my bootloader after an update and before rebooting. There's plenty of advice, but before I hose something worse I'm hoping someone can verify the correct steps to fix this, and then the bootloader incantations I need to perform after each update to avoid future hassles with these modern UEFI thangs.

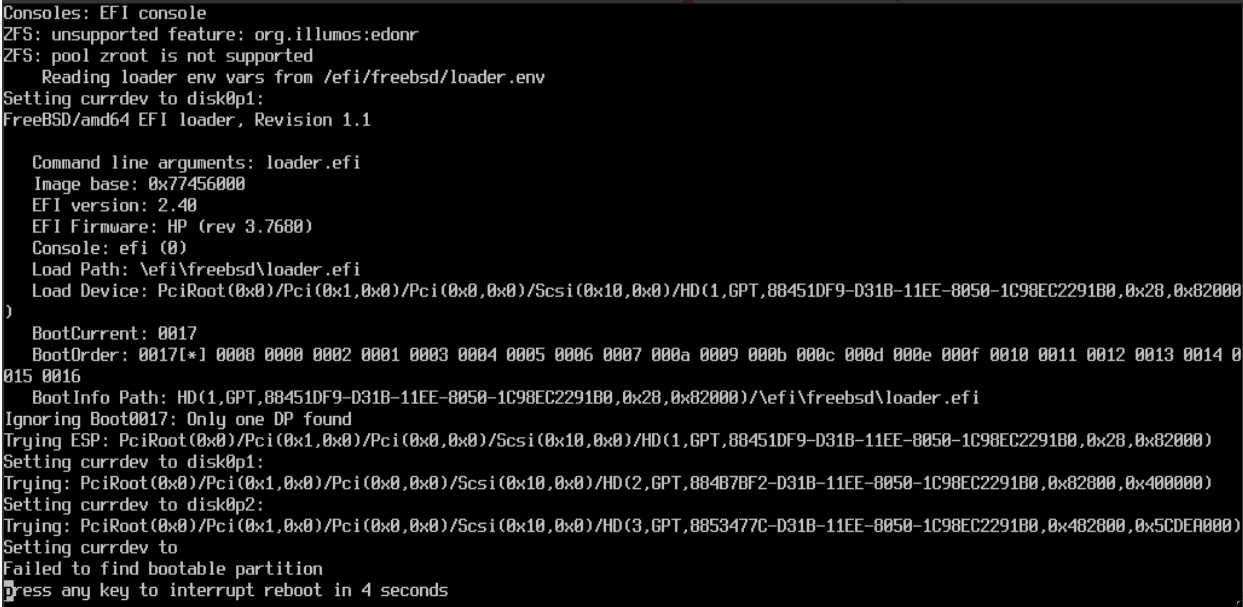

On reboot, I got what appears, from my search-engining, to be a fairly standard unhappy screen:

(note, this is the raw OCR with 0/8 and y/g and probably other weird substitutions)

Consoles: EFI console

ZFS: unsupported feature: org.illumos:edonr

ZFS: pool zroot is not supported

Reading loader env vars from lefi/freebsd/loader.env

Setting currdev to disk8p1:

FreeBSD/amd64 EFI loader, Revision 1.1

Command line arguments: loader.efi

Image base: 8x77456888

EFI version: 2.48

EFI Firmware: HP (rev 3.7688)

Console: efi (8)

Load Path: \efi\freebsd\loader.efi

Load Device: PciRoot(8x8)ci(8X1,8x8)/Pci(8x8,8x8)/Scsi(8x18,8x8)/HD(1,GPT,88451DF9-0313-11EE-8858-1898E8229188,8x28,8x82888

)

BootCurrent: 8817

BootUrder: 8817[*] 8888 8888 8882 8881 8883 8884 8885 8886 8887 888a 8889 888b 8880 888d 8889 888f 8818 8811 8812 8813 8814 8

815 8816

Bootlnfo Path: HD(1,GPT,884510F9—0313—11EE—8858—1898E82291B8,8x28,8x82888)l\efi\freebsd\loader.efi

Ignoring Boot8817: Unlg one DP found

Trying ESP: PciR00t(8x8)/Pci(8x1,8x8)/Pci(8x8,8x8)/Scsi(8x18,8x8)/H0(1,BPT,88451DF9—0318-11EE-8858—1898E8229138,8x28,8x82888)

Setting currdev t0 disk8p1:

Trging: PciRoot(8x8)/Pci(8x1,8x8)/Pci(8x8,8x8)/Scsi(8x18,8x8)/HD(2,GPT,884B7BF2—031B—11EE—8858-1898E8229188,8x82888,8x488888)

Setting currdev to disk8p2:

Trying: PciRoot(8x8)/Pci(8x1,8x8)/Pci(8x8,8x8)/Scsi(8x18,8x8)/HD(3,GPT,88534778—031B-11EE—8858-1898E82291B8,8x482888,8XSCDEH888)

Setting currdev to

Failed to find bootable partition

Press any key to interrupt reboot in 4 seconds

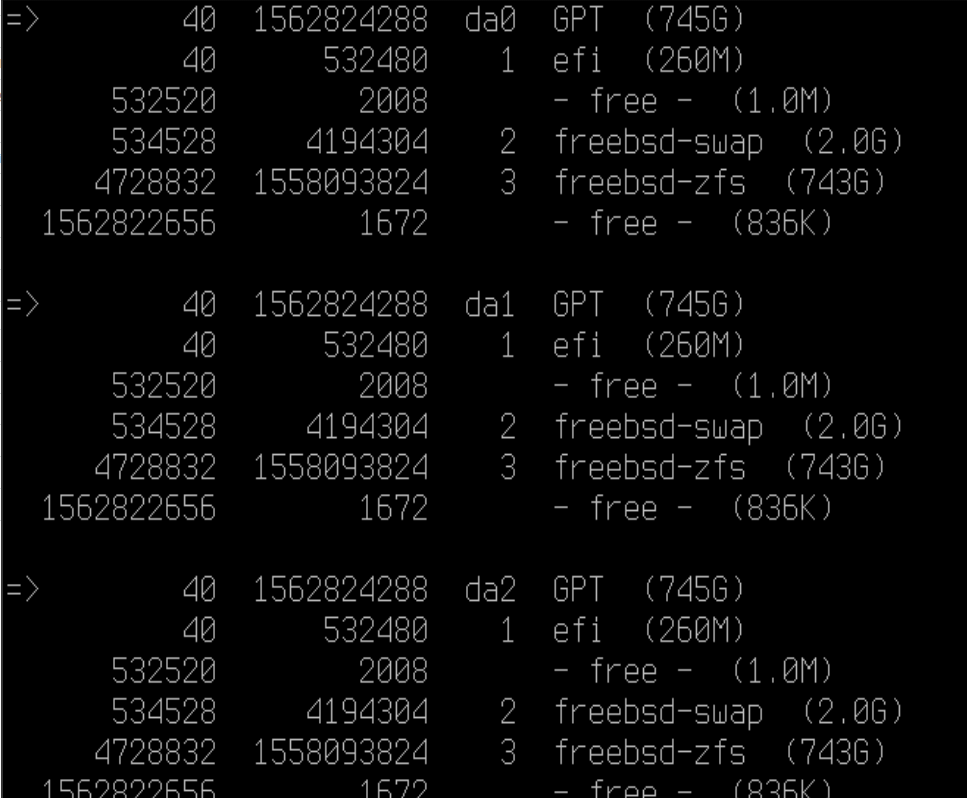

After downloading the FreeBSD-14.0-RELEASE-amd64-bootonly.iso and booting to it via iLO, I could proceed with `gpart show` to enumerate my disk structure: GPT with an EFI partition and no freebsd-boot partition.

I reviewed what appears to be the most current canonical how-to Emrion thread: https://forums.freebsd.org/threads/update-of-the-bootcodes-for-a-gpt-scheme-x64-architecture.80163/ and if I read right, the following incantations (repeated for all 8 drives) should be something like:

mount -t msdosfs /dev/da0p1 /mnt (adjusting the canonical for the naming structure for my drives "ad0p1" -> "da0p1")Then if I'm reading correctly, the corrective commands should be (and the advice is to do both, at least for FreeBSD boot-only systems):

cp /boot/loader.efi /mnt/efi/boot/bootx64.efiand

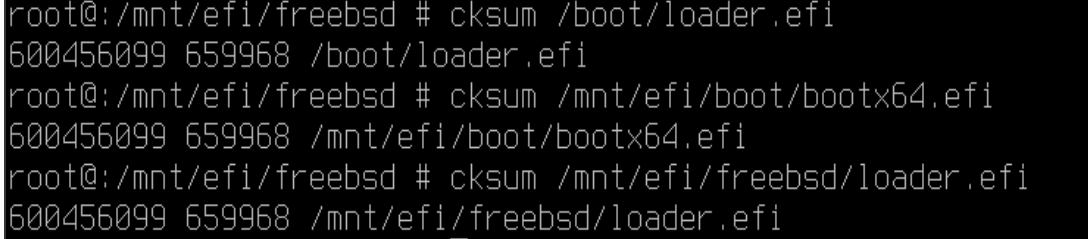

cp /boot/loader.efi /mnt/efi/freebsd/loader.efiEasy enough so far, but here's where I'm having a moment of doubt and insecurity - before copying I checked the checksums with

cksum and:The checksums are all already identical. I mean no harm in copying but what would this do? I'm paralyzed by checksum induced uncertainty, as is so often the case these days.
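To make that checksum comparison repeatable, here is a minimal sketch, assuming the ESP is mounted at /mnt as above. The function names `same_cksum` and `report_loader_copies` are hypothetical helpers made up for illustration; only `cksum` itself is a standard utility.

```sh
#!/bin/sh
# Hypothetical helper: succeed (exit 0) when two files have the same
# CRC and size as reported by cksum(1), fail otherwise.
same_cksum() {
    # Reading from stdin makes cksum print only "<crc> <size>",
    # so the two outputs can be compared as plain strings.
    [ "$(cksum < "$1")" = "$(cksum < "$2")" ]
}

# Report, for each installed copy, whether it matches the source loader.
# Arguments: source loader, then one or more installed copies.
report_loader_copies() {
    src=$1
    shift
    for dst in "$@"; do
        if same_cksum "$src" "$dst"; then
            echo "identical: $dst"
        else
            echo "differs: $dst"
        fi
    done
}

# Example with the paths from this post (ESP assumed mounted at /mnt):
# report_loader_copies /boot/loader.efi \
#     /mnt/efi/boot/bootx64.efi /mnt/efi/freebsd/loader.efi
```

If every line reads "identical", the copy would almost certainly just rewrite the same bytes, which matches what I am seeing.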

I see SirDice's comment in this post about regenerating the EFI boot manager entry with `efibootmgr`, which sounds promising but is a different procedure from the one recommended in the most referenced "how to unbork your update mistake" thread. When booting from removable media, would that command be something like `mount -t msdosfs /dev/da0p1 /mnt` followed by `efibootmgr -a -c -l /mnt/efi/freebsd/loader.efi -L FreeBSD-14` and, my system not being dual/multi-boot with other OSes, also `efibootmgr -a -c -l /mnt/efi/boot/bootx64.efi -L FreeBSD-14`?

And then, from now on, being sure to update the bootloader after any OS or ZFS update of a GPT-UEFI-FreeBSD 14 system, before rebooting, with `cp /boot/loader.efi /boot/efi/efi/freebsd/loader.efi` and, my system not being dual/multi-boot with other OSes, also `cp /boot/loader.efi /boot/efi/efi/boot/bootx64.efi`?
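Since the copy has to be repeated for all 8 drives, a dry-run loop might save some typing. This is only a sketch under assumptions: the drives are da0 through da7, the ESP is partition 1 on each, and /mnt is free to use as a temporary mount point. The function name `print_esp_update_cmds` is hypothetical, and it only prints the commands so they can be reviewed before anything is run.

```sh
#!/bin/sh
# Print (do not run) the per-drive commands for refreshing the loader
# on each ESP. Assumptions: drives are da0..da(N-1), the ESP is
# partition 1 on each, and /mnt is an unused mount point.
print_esp_update_cmds() {
    count=${1:-8}    # number of drives, defaults to the 8 in this post
    i=0
    while [ "$i" -lt "$count" ]; do
        esp="/dev/da${i}p1"
        echo "mount -t msdosfs $esp /mnt"
        echo "cp /boot/loader.efi /mnt/efi/freebsd/loader.efi"
        echo "cp /boot/loader.efi /mnt/efi/boot/bootx64.efi"
        echo "umount /mnt"
        i=$((i + 1))
    done
}

# Example: list the commands for all 8 drives and read them over first.
# print_esp_update_cmds 8
```

Printing rather than executing is deliberate: a wrong device name shows up in the listing instead of on a disk.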